Here's the result of applying non-rigid face tracking to Brad, Rorie, and Vinny while they were talking about Dark Souls 2.

Basically, the computer vision algorithm goes like this:

- Apply Active Shape Model.

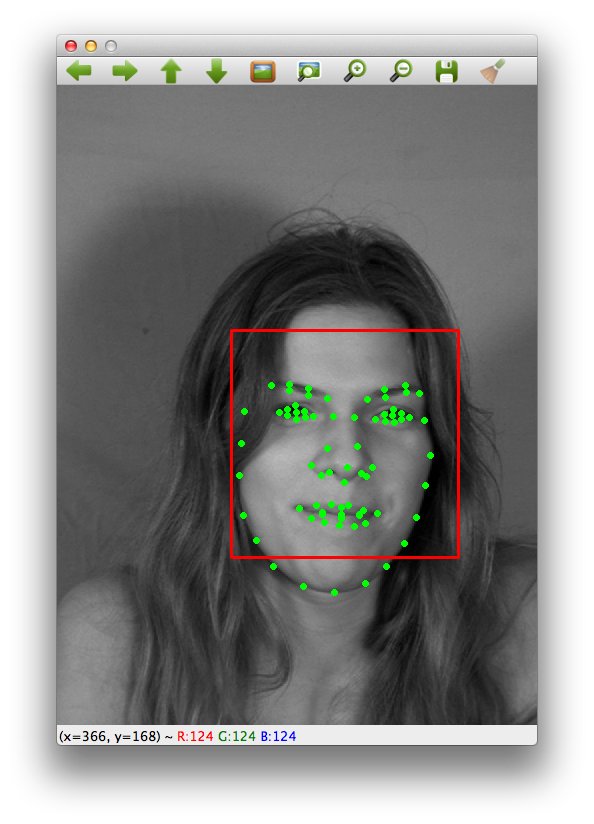

- Basically from a set of training images, in this case more than 1000 different images from MUCT database , we learn the mathematical model that approximately describes the pre-annotated facial shape of human. Here's a sample of image with pre-annotated points (also called as landmarks):

- In this case, we learn the most general shape out of the 1000+ images, which we call the canonical shape. However, the canonical shape alone can't describe the wide variety of faces (some people have taller noses, more pronounced cheeks, etc.), so we also allow shapes to deviate from the canonical shape by up to 3 standard deviations. Here's a sample of the learned Active Shape Model:

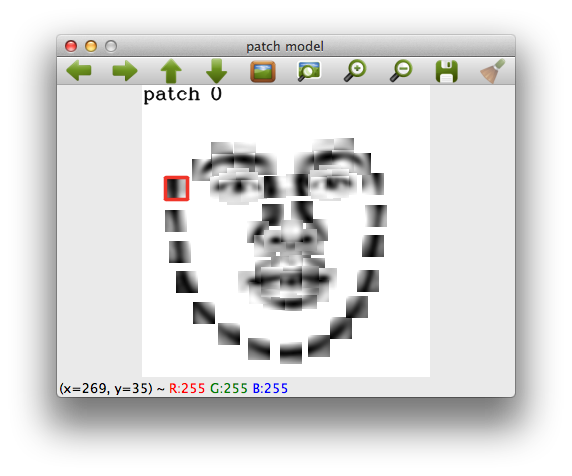
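To make the canonical shape and the 3-standard-deviation limit a bit more concrete, here's a minimal Python sketch. It assumes the standard ASM recipe of a mean shape plus orthonormal modes of variation (usually obtained with PCA on aligned training shapes). The mode and variance below are hand-picked toy values, and the function names `mean_shape` and `fit_shape` are hypothetical, not taken from the linked repo. A new shape is projected onto each mode, the resulting parameter is clamped to ±3 standard deviations, and the shape is rebuilt from the clamped parameters.

```python
import math


def mean_shape(shapes):
    """Canonical shape: the per-coordinate average of (already aligned) training shapes.

    Each shape is a flat list [x1, y1, x2, y2, ...].
    """
    n = len(shapes)
    return [sum(s[i] for s in shapes) / n for i in range(len(shapes[0]))]


def fit_shape(points, mean, modes, variances, k=3.0):
    """Project `points` onto the shape model, keeping every parameter within k std devs.

    `modes` are assumed to be orthonormal variation vectors (e.g. from PCA) and
    `variances` their variances; both are illustrative inputs here.
    """
    offset = [p - m for p, m in zip(points, mean)]
    result = list(mean)
    for mode, var in zip(modes, variances):
        b = sum(d * v for d, v in zip(offset, mode))  # shape parameter for this mode
        limit = k * math.sqrt(var)
        b = max(-limit, min(limit, b))  # clamp to +/- k standard deviations
        result = [r + b * v for r, v in zip(result, mode)]
    return result


# Toy model: two landmarks; the only mode of variation moves the second x coordinate.
training = [[0, 0, 2, 0], [0, 0, 4, 0]]
mean = mean_shape(training)  # [0.0, 0.0, 3.0, 0.0]
print(fit_shape([0, 0, 10, 0], mean, modes=[[0, 0, 1, 0]], variances=[1.0]))
# [0.0, 0.0, 6.0, 0.0]: the +7 shift is clamped to +3 (3 standard deviations)
```

The variance of 1.0 happens to match the spread of the second x coordinate in the two toy training shapes (2 and 4). Clamping is what stops one badly placed landmark from dragging the whole face into an implausible shape.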

- For each pre-annotated point, learn a correlation-based patch model.

- This basically means that for each landmark point, we run stochastic gradient descent (picking a random image from the training set at each of 1000 iterations) to find the patch that gives the best response.

- In layman's terms, rather than learning the whole face, we try to learn the general facial features in a small region (patch) around each landmark point. Here's a sample of the learned patch models:

- Use a Viola-Jones classifier to find the approximate head position in our test data (the GiantBomb video), then apply the shape and patch models to fit the landmark points to each detected face. Here's the result:
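The patch-training and fitting steps can be sketched in the same spirit. This toy works on 1D signals instead of images and makes a few assumptions of its own: the "best response" is taken to be a Gaussian peak centred on the landmark, each patch is a linear filter scored by correlation, and the helper names (`train_patch`, `locate`, `make_signal`) are hypothetical. Each SGD iteration picks one random training signal and nudges the patch toward producing the ideal response; fitting then slides the learned patch over a new signal and takes the peak. The Viola-Jones face detection and the shape-model constraint are left out.

```python
import math
import random


def response(patch, signal):
    """Correlate the patch with the signal at every position where it fits."""
    w = len(patch)
    return [sum(patch[k] * signal[j + k] for k in range(w))
            for j in range(len(signal) - w + 1)]


def ideal_response(n, half, landmark, sigma=1.0):
    """Assumed target: a Gaussian peak where the patch centre sits on the landmark."""
    return [math.exp(-((j + half - landmark) ** 2) / (2 * sigma ** 2)) for j in range(n)]


def train_patch(samples, width=3, iterations=1000, lr=0.01, seed=0):
    """Learn one landmark's patch by SGD on squared error to the ideal response."""
    rng = random.Random(seed)
    patch = [0.0] * width
    for _ in range(iterations):
        signal, landmark = rng.choice(samples)  # one random training "image" per step
        r = response(patch, signal)
        t = ideal_response(len(r), width // 2, landmark)
        # d/dP[k] of sum_j (r[j] - t[j])^2 is 2 * sum_j (r[j] - t[j]) * signal[j + k]
        grad = [2 * sum((r[j] - t[j]) * signal[j + k] for j in range(len(r)))
                for k in range(width)]
        patch = [p - lr * g for p, g in zip(patch, grad)]
    return patch


def locate(patch, signal):
    """Fitting step: slide the patch over the signal and return the peak's centre."""
    r = response(patch, signal)
    best = max(range(len(r)), key=r.__getitem__)
    return best + len(patch) // 2


def make_signal(landmark, length=20):
    """Hypothetical 1D 'image': a small 1-3-1 bump centred on the landmark."""
    s = [0.0] * length
    s[landmark - 1], s[landmark], s[landmark + 1] = 1.0, 3.0, 1.0
    return s


samples = [(make_signal(p), p) for p in (4, 6, 8, 10, 14)]
patch = train_patch(samples)
print(locate(patch, make_signal(12)))  # 12
```

A real tracker would work on 2D image patches, but for a linear correlation patch the SGD update has the same form; the 1D version just keeps the arithmetic small enough to follow.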

It's not perfect: it depends on the lighting of the scene and only copes with fairly simple head movements. Anything that relies on machine learning is also probabilistic, and this isn't state of the art anyway; there are more robust algorithms out there.

If you're interested, I have the source code here: https://github.com/subokita/Sandbox/tree/master/NonRigidFaceTracking

I have no fucking idea why I decided to post this on GiantBomb though.
