AnandTech review: http://www.anandtech.com/show/7457/the-radeon-r9-290x-review

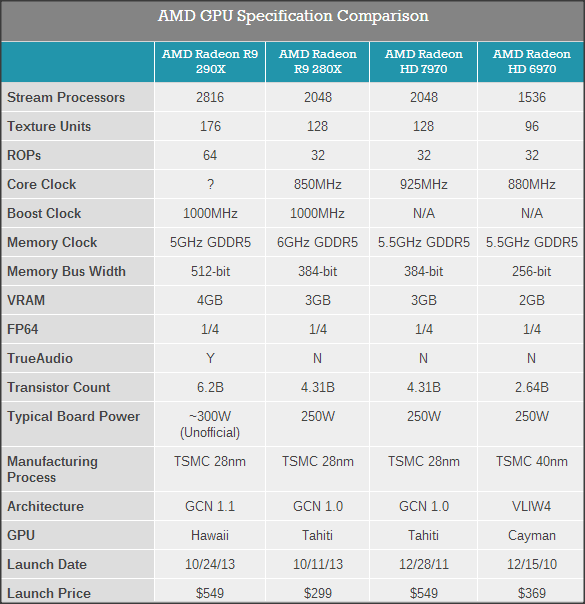

Wrapping things up, it’s looking like neither NVIDIA nor AMD are going to let today’s launch set a new status quo. NVIDIA for their part has already announced a GTX 780 Ti for next month, and while we can only speculate on performance we certainly don’t expect NVIDIA to let the 290X go unchallenged. The bigger question is whether they’re willing to compete with AMD on price.

GTX Titan and its prosumer status aside, even with NVIDIA’s upcoming game bundle it’s very hard right now to justify GTX 780 over the cheaper 290X, except on acoustic grounds. For some buyers that will be enough, but for 9% more performance and $100 less there are certainly buyers who are going to shift their gaze over to the 290X. For those buyers NVIDIA can’t afford to be both slower and more expensive than 290X. Unless NVIDIA does something totally off the wall like discontinuing GTX 780 entirely, then they have to bring prices down in response to the launch of 290X. 290X is simply too disruptive to GTX 780, and even GTX 770 is going to feel the pinch between that and 280X. Bundles will help, but what NVIDIA really needs to compete with the Radeon 200 series is a simple price cut.

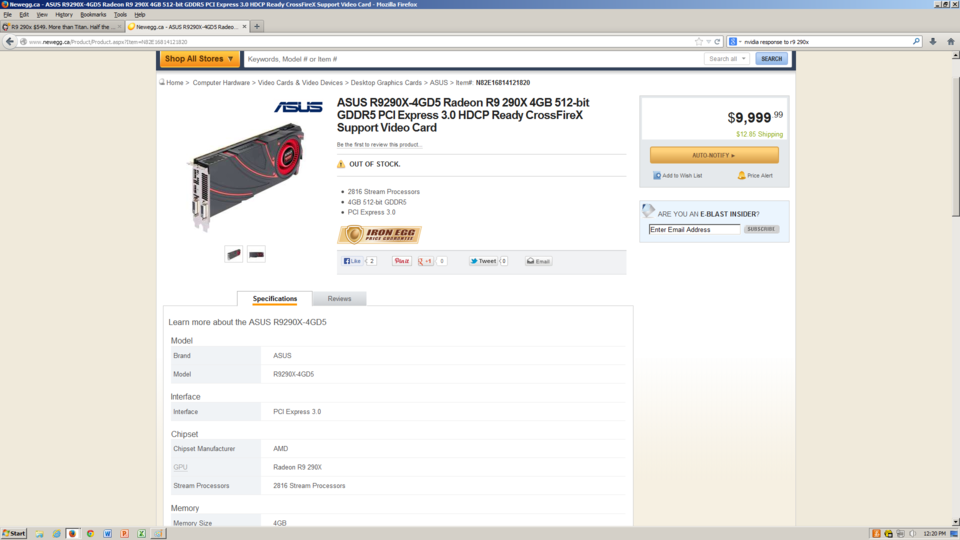

$579 with Battlefield 4 at Newegg:

http://www.newegg.com/Product/Product.aspx?Item=N82E16814202058

Thoughts?
