It's running fine for me (GTX 770, i7) but it crashed my PC two times today.

Watch Dogs

Game » consists of 24 releases. Released May 27, 2014

- PC

- PlayStation 3

- Xbox 360

- PlayStation 4

- Xbox One

- PlayStation Network (PS3)

- Wii U

- Amazon Luna

A third-person open-world game from Ubisoft, set in an alternate version of Chicago where the entire city is connected under a single network, and a vigilante named Aiden Pearce uses it to fight back against a conspiracy.

Is the game running badly on PC for anyone else?

@crommi: so you're saying I should toss my 4GB 670 back in and see if that fixes it, because Ubisoft can't program for two video cards (which is technically what my 690 is)?

Well, that is another option. Your 670 4GB would probably run the game much smoother because it has twice the usable VRAM.

That seems shitty. My 690 was a $900 card brand new. It has 4GB; I should not be restricted to half of my card, especially when I can run BF4 maxed out at 120+ FPS.

No, it has 2GB per GPU and everything is mirrored over to GPU2's memory, so you only have 2GB of usable VRAM. It has a lot of rendering performance, which shows in BF4, but in this case the limited memory is causing a bottleneck. If you were to run BF4 on triple screens with high AA settings, you might experience the same kind of stuttering.
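The arithmetic behind the mirrored-memory explanation above can be sketched in a few lines. This is a simplified model, assuming alternate-frame rendering (AFR), where each GPU keeps its own full copy of textures and buffers; the function name and card figures are illustrative only.

```python
def usable_vram_gb(per_gpu_gb: list[float]) -> float:
    """Rough estimate of the VRAM a game can actually fill.

    Simplified model (assumption): in SLI/CrossFire alternate-frame rendering
    each GPU keeps its own full copy of textures and buffers, so the usable
    amount is the smallest per-GPU pool, not the sum printed on the box.
    """
    if not per_gpu_gb:
        raise ValueError("need at least one GPU")
    return min(per_gpu_gb)


gtx_690 = [2.0, 2.0]    # two GPUs, 2 GB each, sold as "4 GB"
gtx_670_4gb = [4.0]     # one GPU with its own 4 GB

print(sum(gtx_690))                  # 4.0 (marketed total)
print(usable_vram_gb(gtx_690))       # 2.0
print(usable_vram_gb(gtx_670_4gb))   # 4.0
```

The model ignores split-frame modes and driver internals; it only captures why a 4GB 670 can hold textures that a 690 cannot.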

Wow, I just tried swapping back to my 670 and it ran the game at 40-60 fps with the same settings that I tried before without any issue. I don't know what to do now. Is my 690 still worth putting back in and keeping (my 670 seems to only run Watch Dogs and Titanfall better), or should I sell it and get something else? If so, what do I get that would be an upgrade?

@mrbubbles: Your 690's performance is going to vary wildly from game to game due to issues with SLI scaling. The GTX 690 is a beast; unfortunately, sometimes the driver support for certain games is a little lacking. Some games don't support SLI at all, like Wolfenstein: The New Order. It's just sort of the way it is with multi-GPU setups...this is the whole reason I went from two cards to one this time around. You are almost always better off with a single more powerful GPU rather than a dual-GPU card or two lower-end cards in SLI or CrossFire.

You may want to try going into your Nvidia control panel and disabling SLI for Watch Dogs and see what kind of performance you get. You may be surprised at the results...some poorly optimized games actually have negative SLI scaling, and disabling one of the cards (or "half" of your 690 in this case) can actually improve performance. Reports on SLI scaling in Watch Dogs are all over the place, some people are seeing negative scaling and others are getting a 75% improvement in frame rate. There isn't a single answer to this one.

I hear that Ubisoft is working on a patch for Watch Dogs to address performance issues across the board, so maybe you'll see an improvement with that if the latest 337.88 GeForce drivers didn't help you any.

@mb: I tried disabling SLI and running it on ultra with temporal SMAA and it ran around 30fps, but it was way more stable than when I tried that with SLI. I dropped the textures to high and left it at temporal SMAA and it went up to around 40fps, but again it was way more stable there than with SLI. I hope this and Titanfall aren't examples of what to expect from PC games in the future. Otherwise I'd be better off selling the 690 and getting something else, because I'm tired of my games running like shit at launch. If that's the case, then what do I get for a great single card (if this is what to expect from SLI setups)?

@mrbubbles: It's sort of always been that way with multi-GPU configurations, and I don't see that changing anytime soon. I think that's because people who have multiple GPUs make up such a small percentage of the market that there isn't the same level of driver & developer support as there is for single cards.

Personally, I have a 3GB GTX 780 and it's a great card; they run around $500. I think that is still the best bang for your buck in single-GPU Nvidia cards. A step up from that would be the GTX 780 Ti, but it's around $700, roughly 40% more, and you won't get 40% better performance out of it compared to the standard 780. If you want to go with an AMD card, look at the R9 290X at the $500 price point. Whatever you do, when you decide on a specific card make sure you read the reviews on the differences between the various manufacturers, because the same GTX 780 can have different cooling setups and overclocking headroom across the different offerings from MSI, Asus, EVGA, etc. I went with the Asus GTX 780 based on its temperature, noise level, power draw, and clock speed benchmarks.
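The 780 Ti comparison is a frames-per-dollar argument: if the pricier card costs 40% more, it needs at least 40% more performance just to break even on value. A minimal sketch of that arithmetic, using the approximate prices quoted in the post (the function name is illustrative, and the prices are not current market data):

```python
def required_speedup(base_price: float, step_up_price: float) -> float:
    """Fractional performance gain the pricier card needs just to match
    the cheaper card's frames-per-dollar."""
    return step_up_price / base_price - 1.0


gtx_780 = 500.0      # approximate price quoted in the post
gtx_780_ti = 700.0   # approximate price quoted in the post

print(f"{required_speedup(gtx_780, gtx_780_ti):.0%}")  # 40%
```

This deliberately ignores things like overclocking headroom or cooler quality, which the post also recommends checking in reviews.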

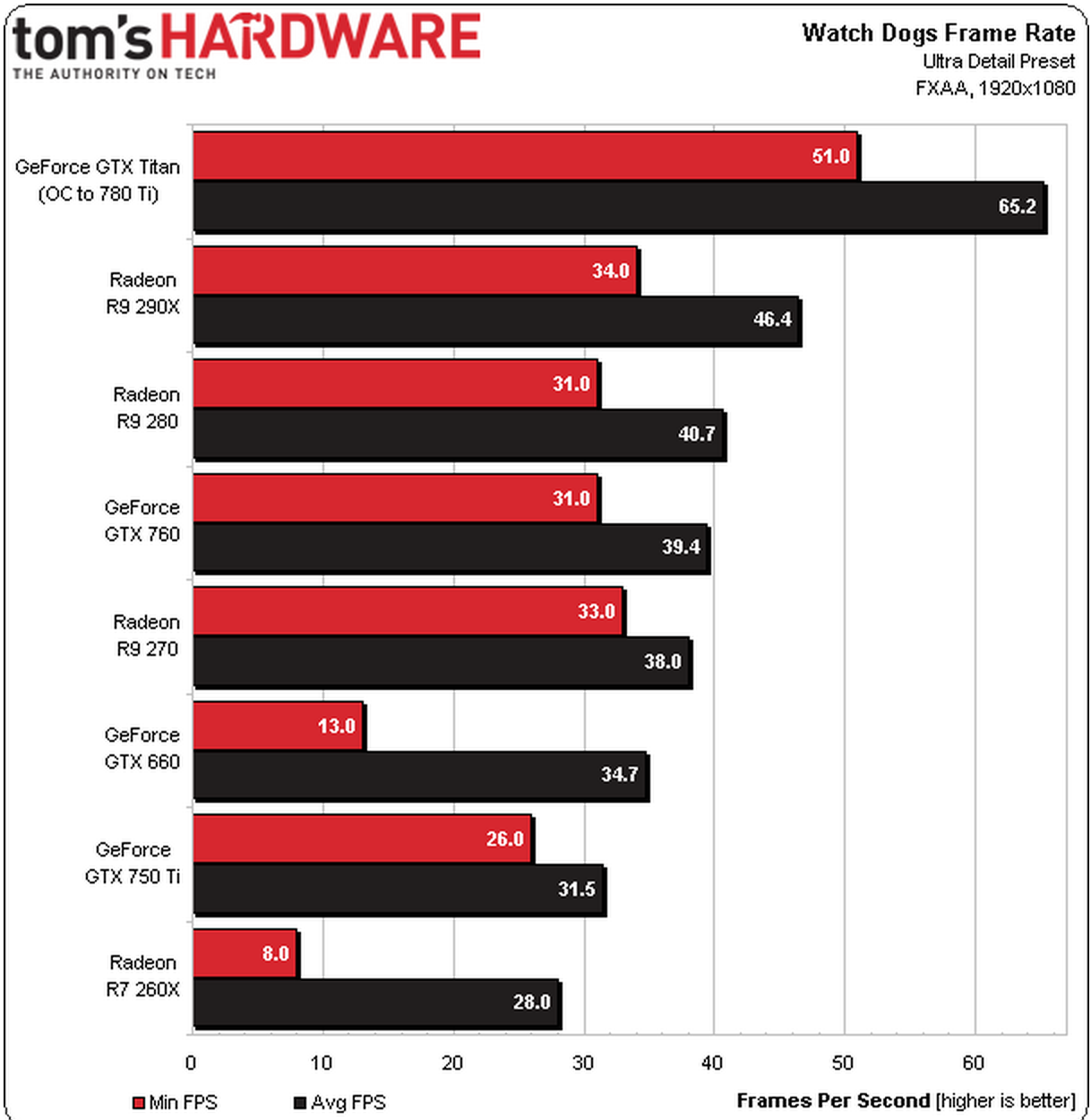

Tom's Hardware's monthly graphics card hierarchy charts are a great resource; they break down the pros and cons of each card and try to rank cards by price-to-performance ratio. Here is May's article; I don't think June's has been released yet. Give it a read! They also did some Watch Dogs benchmarking on a bunch of different test beds...but don't buy a card with one single game in mind. You'll probably be done with it soon and on to the next one anyway.

@mb: Thanks for the advice. I could probably get enough for that 690 (it was $1000 new) to cover the cost of a 780. I've had 4GB on my last two cards and just worry about missing that extra gigabyte. Is that a genuine worry or would I be fine with it?

I think 3GB is plenty for almost everything, unless you're planning on ultra-high resolutions and/or multi-monitor setups.

If you have the appropriate motherboard you can always get one card now and then add a second one later on in SLI when prices come down, if you felt the need to.

I'm getting absolutely huge pop-in all the time when I drive, on low quality settings.

AMD Radeon HD 6670

8GB RAM

Intel i5-3330

My friend said I should be able to run pretty much anything on high settings, but I never can. If I switch Watch Dogs up to high, the framerate drops to unplayable levels, even unwatchable.

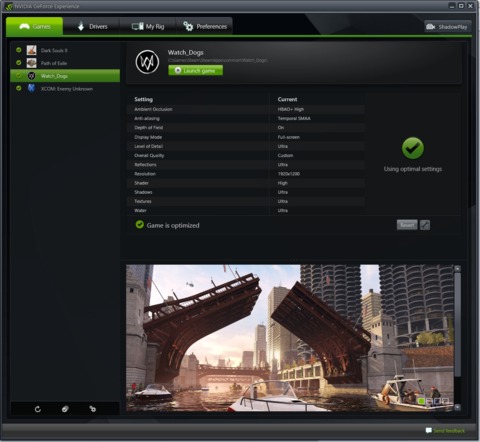

This game is poorly optimized for PC, but what I find even more ridiculous is the communication. Both Nvidia and Ubisoft clearly recommend 3GB of video memory for "Ultra Textures" (as indicated in GeForce Experience and in the game's graphics settings), but it has been proven that the game uses more, closer to 4GB, with "Ultra Textures" at 1920x1080 with no anti-aliasing. So either the game uses more memory than it should (my guess) or it's intended and the communication here, for whatever reason, is incorrect.

There's probably a very simple explanation for the misleading system requirements: Nvidia is the technology partner for this game, and their $900 top-end gaming card (GTX 780 Ti) comes with 3GB of VRAM. You can't really expect Ubisoft to screw over Nvidia, who provided them with tools, libraries and hardware, by announcing that you need a 4GB card (i.e. AMD) to run Watch_Dogs at Ultra settings.

So it is better to lie to its users? I still think there's something wrong here. The game shouldn't need over 3072MB of video memory at 1920x1080 with no anti-aliasing. I have even seen people reporting that the game will use close to 5GB of memory at 1440p. It's insane. While I think the textures, for the most part, are sharp on ultra settings, it doesn't look that much better than, let's say, Far Cry 3, which uses just about 1GB. Even The Witcher 2 has good textures in my eyes, and I remember it uses under 1GB of memory. Who knows, maybe it's indeed intentional and people would actually need a video card with 4GB of memory to enjoy ultra textures. That would be fine with me if it had been said from the beginning, but being told otherwise by multiple trusted sources is annoying and not something I approve of. Either change the requirements or optimize it.

Aside from occasional hitching when driving fast (which seems like normal loading) Watch Dogs runs fine on Ultra for me. Either I'm having the same issues as everyone else and I'm just not noticing, which is unlikely I think, or it really is running fine. My GPU (GTX 680, EVGA 04G-P4-3687-KR) has 4GB of VRAM. Maybe that's why?

After looking at the settings again, it's actually running on Custom. I can't check to see which setting(s) aren't considered Ultra right now. Maybe one of you knows just by looking at the screenshots.

@mb: Thanks for the advice. I could probably get enough for that 690 (it was $1000 new) to cover the cost of a 780. I've had 4gb for my last 2 cards and just worry about missing that extra gigabyte. Is that a genuine worry or would I be fine with it?

I also have a 690 and I honestly don't think it is a genuine worry just yet. I run triple-monitor setups and generally max everything with no problem; this game has unfortunately got some issues in this department.

The x80s are always going to be the best price point for performance as well as support, unfortunately. I ended up getting the 690 purely because it was basically the same cost as buying two 680s for SLI at the time. I too didn't realise until later that the VRAM was effectively capped at 2GB.

I would say don't worry too much about your 690 at this stage over this one piece of shit game, and maybe hold out for an 880 when it comes out. But then again, 690s have not dropped in price very much at all in the last few years, so if you were looking at selling it, sooner could be better than later.

This won't be an issue isolated to Watch Dogs; people are ignoring that this is the direct result of the new console generation's hardware.

People wanted PC games with better high res textures and beefier requirements, now they get them.

Titanfall and Watch Dogs are just the beginning: both of these games at high/ultra settings will kill any rig with less than 3 GB of VRAM, regardless of whether it's multi-GPU. Expect the same behavior from any future AAA release with special "Ultra" settings for the PC version.

A lot of cheap rigs will soon end up outdated, especially for people who tried to save a couple of bucks by going with 2 GB instead of 4 GB of VRAM. New consoles with their bigger unified memory have led developers to just dump large uncompressed textures into memory (that's also why those games are so huge).

People who saved on their CPU will also feel the pain, as newer games like Titanfall and Watch Dogs have better support for hyper-threading while also demanding higher CPU speeds.

Both of these are things where people had to make choices when buying their hardware these past years; a lot of people made the cheaper choices, and those cheap choices are now taking their toll.

@nethlem: I don't agree with your assessment at all. I think games like Watch_Dogs run terribly on PC because Ubisoft built it with consoles in mind and the PC port was bad, not because the new consoles are in any way better than modern, top-end gaming rigs that run Watch Dogs like...dogs. I have a machine that far exceeds the recommended specs for Watch Dogs; I mean, for god's sake, my video card is two entire generations newer than the one recommended, and I can't even get a stable 60fps at a measly 1920x1080. This has nothing to do with the size of textures and everything to do with how poorly it was programmed.

Yeh because a GTX 690 is the cheaper choice.....This is still one of the most expensive cards on the market. Do your research.

Sorry mate, but I'm not buying it. This may be true to some small degree over the next few years, but I doubt it's suddenly a case of "oh sorry, all 2GB cards are worthless now".

There is just too wide a gap between how this looks and plays compared to recent, better-looking games, and it's Ubisoft. They have pulled this with literally every Assassin's Creed release.

It's not just magically next gen now so by default every new game runs bad, it doesn't work that way.

It's probably worth noting that while we are complaining about performance based on our rigs, we are still getting better resolution and framerate than the next-gen consoles. So there is really no point in anyone coming in here and dooming it up with that agenda.

@rowr: If you want this discussion to be dead serious: yes, the GTX 690 was the "cheaper" choice. If one wanted the "kick-ass gaming rig" where "money doesn't matter", one would have bought two 680s with 4 GB of VRAM each and run them in SLI; such a setup would easily eat Watch Dogs. Multi-GPU cards are a pointless waste of money and power; unless you want to go quadruple or higher SLI/CF, there is absolutely no reason to buy these overpriced monstrosities. These cards are more about prestige than actual performance or price/performance ratio.

SLI/CF support has always been notoriously dodgy; people who buy these cards (or run two single cards in SLI/CF) and expect "double the performance" didn't do their homework and thus shouldn't be allowed to waste such obscene amounts of money on hardware.

2GB cards won't be "worthless" now, but don't expect to run "Ultra" settings or anti-aliasing at any HD resolution with tolerable FPS in newer releases. People have gotten too used to "just cranking it up to max" without caring what their rig is actually capable of; now that this won't work anymore, people simply start blaming the software for their own cluelessness. How many of the people complaining actually checked where their hardware is bottlenecking? Is the CPU too slow? The GPU? Does any memory fill up too much? Is the GPU throttling due to thermal issues? What kind of medium is the game running from, SSD or HDD?

Nobody gives a crap or checks for these things, even though it's exactly these things that tell the true story about the game's performance and what's responsible for it performing badly. Knowing them helps one make the right upgrade choices and tweak the right settings to get the game running at desirable framerates with the best possible look.

@nethlem: I don't agree with your assessment at all. I think games like Watch_Dogs run terribly on PC because Ubisoft built it with consoles in mind and the PC port was bad - not because the new consoles are in any way better than modern, top end gaming rigs that run Watch Dogs like...dogs. I have a machine that far exceeds the recommended specs for Watch Dogs, I mean for gods sake my video card is two entire generations newer than the one recommended, and I can't even get a stable 60fps at a measly 1920x1080. This has nothing to do with the size of textures and everything to do with how poorly it was programmed.

In no way did I imply that new consoles are "more powerful than top-end gaming rigs" (even though the PS4 has a certain edge with its 8 GB of unified GDDR5 memory); I merely pointed out that they are way more powerful than the previous-gen Xbox 360/PS3 hardware, especially in terms of memory.

People have gotten too used to the performance ceiling these old consoles imposed on the majority of games. These past 5 years you could basically max out any game even with a modest mid-tier gaming rig; that's the result of the last console generation having been around for so long.

Heck, I've used an HD 5870 1GB for the past 4 years and gotten along pretty nicely in most games with mostly high settings and tolerable fps. It's been a week since I upgraded to an R9 280X 3GB (got one from eBay for 180€) because Titanfall wouldn't run too nicely and I knew that Watch Dogs would end up with very high VRAM requirements (like all open-world games). But my HD 5870 would still run these games on medium settings with tolerable FPS, a 4-year-old graphics card!

My 3-year-old 2600K @ 4.4 GHz will most likely be beefy enough for games throughout this whole console generation; people who opted for lower-end i5s are going to have to get a new CPU down the line.

A decade ago a 4-year gap between GPU upgrades would usually mean not being able to play the "newest releases" at all, and don't get me started on the times when new CPU generations meant quadrupled power every 2 years.

This might sound harsh, but there are way too many clueless people complaining around here; statements like "My graphics card is 2 generations newer than the recommended one!" ooze ignorance about the way PC gaming hardware works. Your graphics card could be twenty generations newer for all I care: if you bought a budget model with low memory bandwidth, little memory and shitty clock speeds, that won't help you much in running any game on demanding settings, because these past few generations of graphics cards have mostly been simple rebrandings of old architectures by Nvidia and AMD.

Only a few people around here actually troubleshoot where their stuttering issues are coming from, by using tools like MSI Afterburner to check VRAM usage or simply monitoring temps to make sure nothing gets throttled. And surprise, surprise: these people do not complain about performance issues.
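For Nvidia cards, a scriptable alternative to watching an Afterburner overlay is polling `nvidia-smi`, which ships with the driver. This sketch is not from the thread: it uses the real `--query-gpu=memory.used,memory.total --format=csv,noheader,nounits` options, while the helper names and the 95% "nearly full" threshold are hypothetical choices. AMD cards like the 280X would need a different tool.

```python
import subprocess


def parse_vram_csv(text: str) -> list[tuple[int, int]]:
    """Parse 'used, total' lines (MiB) as printed by nvidia-smi, one per GPU."""
    rows = []
    for line in text.strip().splitlines():
        used, total = (int(field) for field in line.split(","))
        rows.append((used, total))
    return rows


def nearly_full(rows: list[tuple[int, int]], threshold: float = 0.95) -> list[int]:
    """Indices of GPUs whose memory use exceeds the (hypothetical) threshold."""
    return [i for i, (used, total) in enumerate(rows) if used / total > threshold]


def query_vram() -> list[tuple[int, int]]:
    """Ask the Nvidia driver for current VRAM use; needs nvidia-smi on PATH."""
    out = subprocess.run(
        ["nvidia-smi", "--query-gpu=memory.used,memory.total",
         "--format=csv,noheader,nounits"],
        capture_output=True, text=True, check=True,
    ).stdout
    return parse_vram_csv(out)


if __name__ == "__main__":
    # Made-up sample shaped like a GTX 690 (two 2 GB GPUs) that has run out of room.
    sample = "2010, 2048\n2008, 2048\n"
    print(nearly_full(parse_vram_csv(sample)))  # [0, 1]
```

Calling `query_vram()` in a loop while playing would show whether memory is pegged when the stutter hits, which is the kind of check the post is arguing for.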

The thing Ubisoft fucked up was the pagefile check: it looks like the game checked the state of the pagefile too often before actually writing to it when memory was full, resulting in even worse performance in situations where performance is already shitty due to full memory. That issue can simply be fixed with the -disablepagefilecheck start parameter. It's also likely that they have a memory leak somewhere, leading to decreased performance when the game runs for extended periods of time and especially bad performance when memory fills up, but these kinds of memory leaks are pretty common, especially in open-world games with lots of assets and complex systems/interactions.

GTAIV on PC has similarly insane VRAM requirements for that very same reason; especially if you wanted to use custom high-res mods, you'd better hope you had something around 4 GB of VRAM in your system.

But GTAIV looks like shit compared to Watch Dogs on PC; even GTA5 only has the edge over Watch Dogs in aesthetics (the GTA5 world is simply built more carefully, with a lot more love for detail), but in terms of what's "going on under the hood", Watch Dogs is actually all kinds of impressive; the game just doesn't often show it off that well. A lot of the environment is destructible in a very impressive way, but players hardly notice it because they just sneak past the action, or the action happens so fast (driving) that a lot of the details get lost in the frenzy. For example: in the first hideout you start in, the motel, try getting a 5-star cop rating and watch how the place falls apart from gunfire over time.

Watch Dogs is not a game that looks that impressive on screenshots, it's the moving action with all the particle effects that make the game look great, on the right kind of settings.

With my above-mentioned setup (R9 280X, 2600K @ 4.4 GHz, 8 GB RAM, SSD) I can run the game with Ultra textures, Temporal SMAA, LoD Ultra and everything else on high (except water on medium, which is also responsible for a lot of strain on the hardware) at 30-60 fps, mostly 60 on foot and 30 while driving.

The game looks worlds apart from the PS4 version, and the PS4 never even comes close to 60 fps, so much for that.

The game has started running a lot better for me since the weekend. I don't know if there's been some update pushed out on Ubi's part or what, but the issues of stuttering car chases seem like a thing of the past.

I did my homework: at the time I bought it, the single 690 was a better option than two 680s. I don't know how you came to the conclusion that it's the cheaper option, since it would have cost me almost exactly the same amount. I'm pretty sure it beat them in benchmarks, and there were a few aesthetic reasons, such as noise, though I forget the rest now. Obviously there was nothing pushing 2GB of VRAM enough that it would be a worry then, and I still don't feel there is anything legitimate that does now.

I'm realistic about the performance I expect to get, especially since I'm running across triple monitors. So quit making shitty assumptions. I'm not getting my panties in a knot over the fact that my machine doesn't destroy this. But this game doesn't look nearly good enough to justify any of the performance hit, and it's a goddamn fact across the board that this isn't running as well as expected given what they released as requirements and what the game defaults to.

All this talk about the CPU and SSD bottlenecking or whatever else: I understand you're obviously annoyed at people who just throw money at a system and don't know how it works, but in this case it's been narrowed down to a few specific issues with VRAM usage.

You seem intent on making this an issue of people's expectations of their cheaper rigs, and I guess that's a fair assumption to make; for a large percentage that might be true. But the fact is this game has performance issues across all of the newest hardware, completely out of line with what you are actually getting. That's why these threads exist, why websites have gone as far as publishing stories about the poor performance, and why Ubisoft has come out and recognised it as an issue.

Your reply was to a guy asking if he needed to worry about his 690 or upgrade. The answer is fucking no; don't be so fucking ridiculous as to argue it and put ideas in someone's head that they need anything more than a GTX 690 right at the minute.

You did not do your homework, you are still not even trying to do it!

Two 680s with 4 GB (ON EACH CARD) end up being more expensive than a single 690, because the 4 GB versions of the cards cost a small premium. Sure, if you compare the 690 to two 2 GB 680s, the price ends up being around the same, but 2 GB of VRAM has been a questionable choice for a while, especially for full HD+ resolutions, multi-monitor setups and/or anti-aliasing; all of these take a very heavy toll on VRAM, even more so if you combine them.

You are in no way being "realistic" here with statements like "You don't need much VRAM!" while running a multi-monitor setup. Previously you even admitted that you didn't know the 690's "4 GB of VRAM" only counts as 2 GB until after you bought the card, bad homework from which you seem to have learned nothing. One can never have enough VRAM, especially if one plans on making actual use of PC features like 4K resolution, multi-monitor setups or anti-aliasing filters, provided the GPU is actually fast enough to fill up that VRAM.

Just because somebody spent a lot of money on that 690 doesn't mean it was the best (aka most future-proof) choice. It's not the amount of money that matters but what you spend it on; you don't need to buy the most expensive "high end" models, since the performance editions (one level below that) usually deliver the most bang for the buck while usually being pretty future-proof.

It also doesn't matter "what the game defaults to"; if the default does not fit you, then you are free to change the settings to customize how the game looks and runs to your liking. Welcome to PC gaming. It's also not a "fact" that the game "isn't running well across the board"; it simply isn't running well for people who had the wrong expectations of their hardware (like 4 GB 690s with perfect SLI scaling, yeah...) and are clueless about hardware in general. I know this sounds offensive to a lot of people who consider themselves some "master race PC gamer" because they spent 2000+ bucks on their rigs, but being offensive is not my intention; my intention is to be educational.

There is solid proof that a lot of the issues boil down to a lack of VRAM and HT in a ton of setups: Watch Dogs' performance profits immensely from having a beefy CPU with HT and a GPU with a lot of VRAM. PC Games Hardware (considered among the most competent outlets when it comes to PC gaming hardware) has a lengthy in-depth article on Watch Dogs' performance and how different hardware combinations perform. Sadly it's in German, and a lot of the actual conclusions from the benchmarks are in the text. People often just look at the graphs and interpret them the wrong way ("Uhh look at the shitty fps even a Titan has!") while ignoring that the benchmark was run with supersampling enabled, representing the "worst case scenario". Just looking at individual graphs, without seeing them in the overall context, is a pretty weak way of judging the performance of hardware or software.

I've been building my own gaming rigs for close to 20 years now; I'm not simply talking out of my ass here. Hyper-Threading and vram are the two things a lot of people cheaped out on when they upgraded their rigs these past years, because back then everybody would say "No game needs that much vram, no game supports HT, save the money, don't buy it!", and a lot of people still give that same advice today. But that advice has been wrong for the past 3 years and will be even more wrong the more "next gen" games get released. A lot of them will support HT, leading to nice performance boosts, while also requiring a lot of vram for high-res textures.

Just to put this "jump" into numbers: the PS3 had 256 MB of vram, while the PS4 can, theoretically, go up to 8 GB of vram. This increase now also trickles down to the requirements for the PC versions of games, which is kind of nice considering that PC gaming has become rather stale these past years. Barely any games have been really "demanding" for the hardware, except when running them at Ultra HD resolutions and/or with anti-aliasing, and in those scenarios a lot of GPUs simply end up overwhelmed.

But telling people right now that 2 GB of vram is in any way, shape or form "future proof" is simply lying to them, especially if they are planning on using the "Ultra" texture setting or AA in any newer release.

I uninstalled that piece of shit.

Ubisoft clearly didn't give a shit about optimising this game, so why should I give two shits about playing it? It's not even a good game. My PC goes above and beyond the system requirements for ultra.

Please enlighten us about the specs of your PC; otherwise your comment only adds to the pointless, clueless drivel, of which we already have more than enough around here.

My guess: low vram, no HT on the CPU (or an AMD CPU) and/or a multi-GPU setup, right?

All this is kind of sad considering how simple it actually is: affordable, 60 fps, Ultra details. You can only have two of those, never all three at the same time, and even the latter two are never really guaranteed and are more of a gamble due to crappy multi-GPU support throughout the industry. By the way, you should blame AMD and Nvidia for that, not game developers: the drivers are responsible for proper support of multi-GPU setups, as they should be. Outsourcing that responsibility to game developers has never worked and never will, because barely any development studio has the budget to buy all kinds of high-end multi-GPU setups just to optimize for them. Even if money weren't an issue, supply often ends up being the real problem with these cards, which are built in comparatively limited numbers.


GTX 770 4GB, 3770K, 16GB RAM, SSD.

I wouldn't complain unless I knew I had grounds to. Fine, I might not be able to play it on all ultra, but it should STILL run better than this.

@unilad: Unfortunately it's a problem with the code. I have a similar setup to yours with only a 2GB video card, and a Radeon at that, and the game runs pretty much flawlessly on High when I put the AA down lower. It's a shame since Watch Dogs really is a fun game, but poor performance can ruin that real quick.

It's true though that most games from the Ubisoft stable that I've played recently have run way worse than they had any right to.

@nethlem: I don't agree with your assessment at all. I think games like Watch_Dogs run terribly on PC because Ubisoft built it with consoles in mind and the PC port was bad, not because the new consoles are in any way better than modern, top-end gaming rigs that run Watch Dogs like... dogs. I have a machine that far exceeds the recommended specs for Watch Dogs, I mean for god's sake my video card is two entire generations newer than the one recommended, and I can't even get a stable 60fps at a measly 1920x1080. This has nothing to do with the size of textures and everything to do with how poorly it was programmed.

Exactly.


Especially, for how much these games cost!

@unilad: Well, they cost the same as they have for the past 8 years, so that's not really a huge issue for me. I do wish developers put more time into making sure PC ports run well, and unlike most people I don't think it's Nvidia's or AMD's fault; it's the developer's responsibility to make sure their product runs well on the hardware that's out there. If you don't have the resources to test your game on a sufficient number of hardware configurations and optimize the experience for users, then simply don't release it on PC at all.

@nethlem: Christ, you're a condescending motherfucker.

Still "run better than this" based on what? You have to realize that you are throwing highly subjective terms around here. How did it run, and on what settings? Please define "STILL run better" in a way that's actually meaningful; otherwise you are sadly just writing words for the sake of writing words. Did you honestly expect any current-gen open world game to easily run at a constant 60 fps while not looking like complete shit?
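To make "runs better" measurable, one option is to log per-frame render times (Fraps has a frame-time logging option for benchmark runs) and compare the average fps with the slowest 1% of frames, which is where stutter shows up even when the average looks fine. A minimal Python sketch: the input is assumed to be a plain list of frame times in milliseconds rather than any particular tool's file format, `frame_stats` is a hypothetical helper, and the "1% low" metric is a common convention, not something defined in this thread.

```python
def frame_stats(frame_times_ms):
    """Summarise per-frame render times (milliseconds) as fps figures."""
    if not frame_times_ms:
        raise ValueError("need at least one frame time")
    n = len(frame_times_ms)
    # Average fps = frames rendered / seconds elapsed.
    avg_fps = 1000.0 * n / sum(frame_times_ms)
    # Slowest frames first; the average of the slowest 1% is the "1% low".
    slowest = sorted(frame_times_ms, reverse=True)
    worst_count = max(1, n // 100)
    worst_avg_ms = sum(slowest[:worst_count]) / worst_count
    return {
        "avg_fps": avg_fps,
        "low_1pct_fps": 1000.0 / worst_avg_ms,
        "worst_frame_ms": slowest[0],
    }


# Two hypothetical 100-frame runs: one steady, one with a single 100 ms hitch.
smooth = frame_stats([16.0] * 100)
stutter = frame_stats([16.0] * 99 + [100.0])
print(round(smooth["avg_fps"], 1), round(smooth["low_1pct_fps"], 1))
print(round(stutter["avg_fps"], 1), round(stutter["low_1pct_fps"], 1))
```

Both runs average roughly 60 fps, but only the 1% low figure shows the hitch in the second one, which is the kind of number that makes "it stutters" comparable between rigs and settings.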

And yes, I might be condescending, but this whole "drama" is just so hilarious: one side of the web complains that Watch Dogs favors Nvidia too much because of shitty performance on AMD cards, while over here people with Nvidia cards complain about "not enough performance".

When in reality the issue simply boils down to people running the game with too high a texture resolution and/or too much anti-aliasing while not having enough vram to support those settings. A lot of the people with "massive performance issues" would simply need to drop texture quality and/or AA one notch to bring the game from "unplayable" to "rock solid". Of course you can ignore all technical realities and pretend that "the game is badly optimized" after you tried to cram ultra textures with AA into the 2 GB of vram on your GPU. Once the vram is full, the game starts offloading assets to the swapfile on the hard drive (which for a lot of people is a super slow HDD), and that is what kills performance so heavily for so many people. Or, to quote Sebastien Viard, the game's Graphics Technical Director:

“Making an open world run on [next-generation] and [current-generation] consoles plus supporting PC is an incredibly complex task.” He goes on to say that Watch Dogs can use 3GB or more of RAM on next-gen consoles for graphics, and that “your PC GPU needs enough Video Ram for Ultra options due to the lack of unified memory.” Indeed, Video RAM requirements are hefty on PC, especially when cranking up the resolution beyond 1080p.

That last part is especially important, even though way too many people believe it doesn't apply to them: "especially when cranking up the resolution beyond 1080p". This also applies when you enable anti-aliasing while running at 1080p, because in essence AA is a resolution boost. That's also why AA is among the first things you disable or tone down when something runs extremely shitty: it's seriously resource-heavy on the GPU and vram. But too many people refuse to tone down the AA because they've gotten too used to Xbox 360/PS3-era games, where PC tech was largely unchallenged and people could just "crank it up to the max" without many issues.
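A rough sketch of why AA behaves like a resolution boost as far as memory goes: with multisampling, every pixel of the colour and depth buffers stores several samples, so those buffers grow with the sample count exactly as they grow with pixel count. Python, assuming 4 bytes of colour plus 4 bytes of depth/stencil per sample; `render_target_mib` is a hypothetical helper, and real engines keep several extra render targets, so actual totals are considerably larger.

```python
MIB = 1024 * 1024


def render_target_mib(width, height, samples=1, bytes_per_sample=8):
    """Memory for one colour + depth/stencil buffer pair, in MiB.

    bytes_per_sample = 4 (RGBA8 colour) + 4 (24-bit depth + 8-bit stencil).
    """
    return width * height * samples * bytes_per_sample / MIB


print(render_target_mib(1920, 1080))             # 1080p, no AA: 15.8203125
print(render_target_mib(1920, 1080, samples=4))  # 1080p, 4x MSAA: 63.28125
print(render_target_mib(3840, 2160))             # 2160p, no AA: 63.28125
```

Under these assumptions, 4x MSAA at 1080p needs the same buffer memory as rendering at 3840x2160 without AA, which is the sense in which AA counts as "cranking up the resolution" for vram.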

But it's not really that complicated...

Just get Fraps to keep track of your fps and MSI Afterburner to keep track of your computer's resources; once both are running, start looking for the settings that match your setup. Is your GPU not at 100% load? Crank up some visual detail! Is your vram filling up to the brim? Tone down some of those details (textures, resolution and AA fill up vram)! Is your CPU actually busy doing something, or is your GPU bottlenecking it? All these things matter for the gaming performance of an individual rig.
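The checklist above, written as a small Python decision function. This is only a sketch: the readings are assumed to be typed in by hand from an overlay such as MSI Afterburner's (the code doesn't read anything from it), `Reading` and `suggest` are hypothetical names, and the 95% thresholds and the "busiest core" test for a CPU bottleneck are rules of thumb, not values from this thread.

```python
from dataclasses import dataclass


@dataclass
class Reading:
    """Numbers read off an on-screen overlay while playing (entered by hand)."""
    gpu_load_pct: float
    vram_used_mib: float
    vram_total_mib: float
    busiest_cpu_core_pct: float


def suggest(r: Reading, vram_limit=0.95, busy_pct=95.0) -> str:
    # Full vram comes first: raising detail would only push more data out of it.
    if r.vram_used_mib >= vram_limit * r.vram_total_mib:
        return "lower textures, resolution or AA"
    # GPU is waiting and one CPU core is maxed: the CPU is holding it back.
    if r.gpu_load_pct < busy_pct and r.busiest_cpu_core_pct >= busy_pct:
        return "CPU-bound: GPU settings won't change much"
    # GPU is waiting for no obvious reason: there is room for more detail.
    if r.gpu_load_pct < busy_pct:
        return "GPU has headroom: raise some visual detail"
    return "GPU-bound: settings are at this rig's limit"


print(suggest(Reading(99, 1990, 2048, 60)))
print(suggest(Reading(70, 1200, 2048, 50)))
```

The vram check is deliberately first, since a card that is out of memory can show high GPU load and still stutter.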

I can run this game on a 3-year-old mid-range CPU and a current-gen mid-range AMD GPU at nearly "max settings" while still reaching 60 fps (depending on the scene), so please tell me more about that "poor performance", because I certainly can't see anything like it over here. Is it the best-optimized game ever? Nope, not really; open world games rarely are. But too many people are making a mountain out of a molehill because they simply refuse to tone down their visual settings by just one notch.
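What "one notch" can mean for vram, assuming (hypothetically) that each texture quality step halves texture width and height: a texture with a full mip chain then shrinks to about a quarter of its size. Python sketch with uncompressed 4-byte texels; real games use compressed texture formats, so absolute sizes are smaller, and the texture count of 20 and the 512 MiB reserve for framebuffers are invented numbers for illustration. `texture_bytes` and `fits_in_vram` are hypothetical helpers.

```python
MIB = 1024 * 1024


def texture_bytes(width, height, bytes_per_texel=4, mipmapped=True):
    """Size of one texture, including every mip level down to 1x1."""
    total = 0
    while True:
        total += width * height * bytes_per_texel
        if not mipmapped or (width == 1 and height == 1):
            return total
        # Each mip level halves both dimensions (never below 1).
        width, height = max(1, width // 2), max(1, height // 2)


def fits_in_vram(count, side, vram_mib, reserved_mib=512):
    """Do `count` square textures fit next to a fixed framebuffer reserve?"""
    needed = count * texture_bytes(side, side)
    return needed <= (vram_mib - reserved_mib) * MIB


for side in (4096, 2048):
    print(side, round(texture_bytes(side, side) / MIB, 2), "MiB each,",
          "fits in 2 GB:", fits_in_vram(20, side, 2048),
          "| fits in 4 GB:", fits_in_vram(20, side, 4096))
```

With these made-up numbers the 4096x4096 set overflows a 2 GB card but fits a 4 GB one, while one step down fits both, which is the "drop textures one notch" effect in miniature.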

These people won't have much fun in the years to come. For the next 1-2 years they might still be able to blame "bad optimization/console ports", but sooner or later the 8 GB of unified GDDR5 memory inside the PS4 will take its toll on the requirements for future PC versions of these games. It's called progress, and I'm damn happy that we are finally getting some again.

Or have people already forgotten that even Epic wanted more memory in the next-gen consoles? The last console generation was heavily bottlenecked by its memory, so in turn nobody really made great use of all the extra memory present in most PCs. This generation isn't about raw computing power (we didn't get super large jumps in that department); it's all about memory size (where we did get large jumps: thanks to the prevalence of SSDs, memory chips have become cheap).

@nethlem: I think you're overestimating the impact the PS4's GDDR5 is going to have on games... the new consoles are SOCs. No amount of GDDR5 in the world is going to make up for having a weak SOC GPU that needs to run on low power and be ultra quiet versus a discrete, fully powered graphics card.


Watch Dogs isn't Crysis.

It isn't groundbreaking. It is a game meant for mass-market appeal. It should run great on most PCs. Whilst you can have a niche game with bad optimisation, there is NO excuse for creating a game, knowingly marketing it widely, then selling a shitty unoptimised PC version.

It's a disgrace. I cannot believe people give Ubisoft a break.

I'm guessing the performance fix isn't out yet because I still have to play on high.

i7 4770k

SLI 770's

One of my workmates has played it on PC and claims it runs like trash on his. Not sure of his exact specs, but I know his PC runs Battlefield at 1080p/60 fps.

@colourful_hippie: No performance fix will give you "Ultra textures" running at a decent framerate with those 770s and their 2 GB of vram. Sorry, but there is nothing Ubisoft could possibly "patch" about you simply not having enough memory.
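Why two 2 GB cards don't add up to 4 GB: in SLI's usual alternate-frame rendering mode each GPU renders whole frames on its own and keeps its own full copy of textures and buffers, so the usable pool is one card's memory. A minimal Python sketch of that rule; the helper names are hypothetical and other multi-GPU modes are ignored.

```python
def usable_vram_gib(per_gpu_gib):
    """Usable vram under alternate-frame rendering: data is mirrored on every GPU."""
    if not per_gpu_gib:
        raise ValueError("need at least one GPU")
    # Every GPU must hold everything, so the smallest card sets the limit.
    return min(per_gpu_gib)


def naive_total_vram_gib(per_gpu_gib):
    """What you get by simply adding the cards' memory together."""
    return sum(per_gpu_gib)


setups = {"SLI GTX 770 2 GB": [2, 2], "GTX 690": [2, 2], "single 4 GB card": [4]}
for name, cards in setups.items():
    print(name, "naive total:", naive_total_vram_gib(cards),
          "GB, usable:", usable_vram_gib(cards), "GB")
```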

@unilad: You are right that Watch Dogs is no Crysis, as the two games don't even share a genre, but it is a "Crysis" in the sense that it makes use of 3+ GB vram setups and HT CPUs.

What made Crysis so "special" on its release was that it basically killed every GPU you threw at it (sounds familiar?), and you'd better have brought a multi-core CPU along for the ride if you wanted any decent fun.

The game works well on medium settings on most setups; that's where the "mass market" actually sits on the performance spectrum. But if the "mass market" expects to max out every new game regardless of the actual performance of its setup, then the "mass market" must have gotten pretty damn stupid and clueless.

@nethlem: I think you're overestimating the impact the PS4's GDDR5 is going to have on games... the new consoles are SOCs. No amount of GDDR5 in the world is going to make up for having a weak SOC GPU that needs to run on low power and be ultra quiet versus a discrete, fully powered graphics card.

Not overestimating at all. By the end of the current console generation (PS4/Xbox One) the average vram on gamers' GPUs will be around 6+ GB; I'm willing to bet money on that (and will most likely lose, as this console generation could also end up crashing and burning pretty soon). I already mentioned Epic, and Sebastien Viard also goes into detail about this in the article linked above: it's all about streaming from memory.

An SOC might never be able to compete with the raw computing power of a dedicated GPU, as the SOC has to spend some of its performance on tasks that a dedicated CPU usually handles in a PC.

But what you're ignoring is that an SOC removes another bottleneck, the one between CPU and GPU, which is also why the large unified memory is so important. The PS4 basically preloads all the required assets into memory instead of having to "compute" them at the moment it needs them.

And while a gaming PC might have 8+ GB of dedicated system memory, it's only DDR3, which is fairly slow compared to GDDR5. In that regard a PC architecture also has a lot more potential bottlenecks (system RAM -> CPU -> GPU -> vram, with all kinds of bridges between them, vs. SOC -> shared system RAM/vram). That's why it's easy to underestimate how much actual "CPU power" SOCs can deliver while still keeping plenty of performance free for GPU tasks.
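A back-of-the-envelope comparison of links in that chain, using theoretical peak figures: PCIe 3.0 x16 (8 GT/s per lane, 128b/130b encoding), dual-channel DDR3-1600, and the 176 GB/s usually quoted for the PS4's GDDR5. Sustained real-world rates are lower, the 500 MB asset batch is an invented figure, and copying over PCIe is not the same operation as the PS4 reading from its own memory, so treat this only as an order-of-magnitude illustration of why data that has to cross the bus mid-game causes hitches. The helper names are hypothetical.

```python
def pcie_gb_per_s(gt_per_s, lanes, encoding=128 / 130):
    """Peak one-direction PCIe bandwidth in GB/s (1 bit per lane per transfer)."""
    return gt_per_s * encoding * lanes / 8


def transfer_ms(megabytes, gb_per_s):
    """Time to move `megabytes` at `gb_per_s`; MB / (GB/s) comes out in ms."""
    return megabytes / gb_per_s


PEAK_GB_PER_S = {
    "PCIe 3.0 x16 (system RAM to GPU)": pcie_gb_per_s(8.0, 16),
    # 2 channels x 1600 MT/s x 8 bytes (64-bit) per transfer
    "dual-channel DDR3-1600 (system RAM)": 2 * 1600e6 * 8 / 1e9,
    "PS4 unified GDDR5": 176.0,
}

FRAME_MS_AT_60FPS = 1000 / 60
for link, bw in PEAK_GB_PER_S.items():
    ms = transfer_ms(500, bw)
    print(f"{link}: {bw:.1f} GB/s, 500 MB in {ms:.1f} ms "
          f"({ms / FRAME_MS_AT_60FPS:.1f} frames at 60 fps)")
```

Even at its theoretical peak, pushing a few hundred MB across PCIe takes longer than one 60 fps frame, which lines up with the stutter people describe once vram overflows.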

Look, it's not like I'm claiming something unthinkable or never suggested before: the long-lasting 360/PS3 console generation has held back PC gaming hardware requirements for quite a while, which is also why PC gaming got especially "cheap" these past years. Games that made "full use" of the available high-end hardware out of the box, just for "shiny stuff", have been very few. Sure, you can always crush your GPU in any game by throwing absurd amounts of anti-aliasing at it, but that's not really a useful benchmark for the actual performance increases (in terms of how much better new hardware actually got) we've had these past years.

This new console generation is far more "PC-like" than many think, which is why we get higher PC requirements: the "base console version" will be more demanding from the very start, so an appropriate PC version will end up even more demanding than 360/PS3 ports (reminder: 256 MB vram). It's only the logical course of things that the "base performance" of gaming PCs has to rise over time until it hits another pseudo-imposed "console ceiling".

Seriously.

Condescending is one word for it.

Mr. Fucking Know-It-All is pretty happy to talk all day, telling us things we know and that there's obviously a single upgrade path we all should have taken, when the simple matter is, like you say, that it should run better than it does.

If I'm telling you things that "we know", how come you chose a shitty upgrade path? There also isn't "a single upgrade path we all should have taken"; there have simply been choices made in the past that were the wrong ones. Look, I also frequent some hardware-related forums, and over there it's always been the same story with "Need help with gaming build!" threads: they turn into discussions about how much vram a setup needs, and people always skimp on the extra vram because "no game ever uses it".

Now mainstream AAA games are actually starting to use that extra vram without any third-party mods, and people with the cheaper, smaller-vram versions get angry at the software for filling their memory too fast, while people with the extra vram are happy they can finally fill it up with something like ultra textures. It's all kind of ironic.

The game runs fine for me, but only after getting past the hot garbage that is Uplay. Gameplay itself is silky smooth.

Proc: Intel i7-3770K 3.5 GHz

Video Card: Asus GTX 670 DirectCu II 2 GB

Ram: 16 GB Kingston HyperX Genesis 4X 4GB

OS: Windows 8 Pro 64 Bit

@nethlem: Oh my fucking god, will you listen to yourself.

Good news everyone!

After the great results with Watch Dogs PC version, Ubisoft and Nvidia are extending their partnership and their upcoming AAA-titles will all be incorporating Nvidia GameWorks to deliver "amazing experiences" for the PC. Act fast and you can still get your Nvidia Titan Z so you're ready to experience the upcoming "Assassin's Creed Unity" and "Far Cry 4" in the way they're meant to be played.

Source: NVIDIA GameWorks To Enhance Assassin’s Creed Unity, Far Cry 4, The Crew & Tom Clancy’s The Division

(sarcasm)

So after running stably for the entire game, for some reason it totally chugged during Act 5, which is possibly the worst point for it to start chugging.

So, this seems to be a thing now...

Watch_Dogs original graphical effects (E3 2012/13) found in game files [PC]

@fattony12000: This is crazy. Anyone tried the patch yet? Good thing I waited before playing this on the PC.

Patch 1.03.471 is out now and guess what, it didn't do... pardon my language... fuck all for the performance. I really enjoy this game, but Ubisoft seriously needs to get their shit together. As it stands now it's a hot stuttering mess. Maybe you'll have better luck than me with this update; it should only be around 50MB on Steam.

Yeah, I downloaded this huge, apparently 14 GB patch, and guess what? It didn't do jack shit. It actually seemed to make the stuttering worse on my PC. My PC that has 16 GB of RAM and a GTX 780 Ti. On medium settings...

I am so, so glad I got this game for free with my graphics card. Feel bad for anybody whose cash Ubisoft outright stole. I've never boycotted a company before, but Ubisoft will officially never see another dime of my money. It's just become increasingly clear with every new PC release that they simply don't give a shit.
