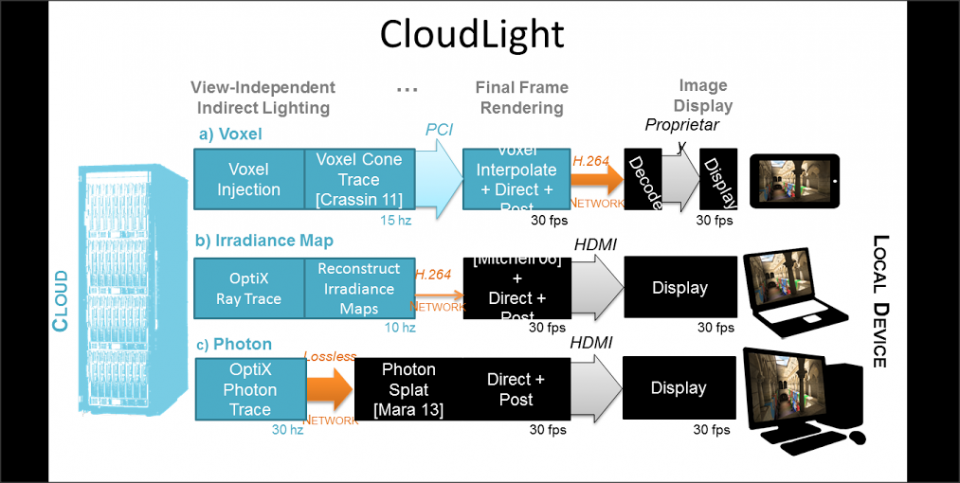

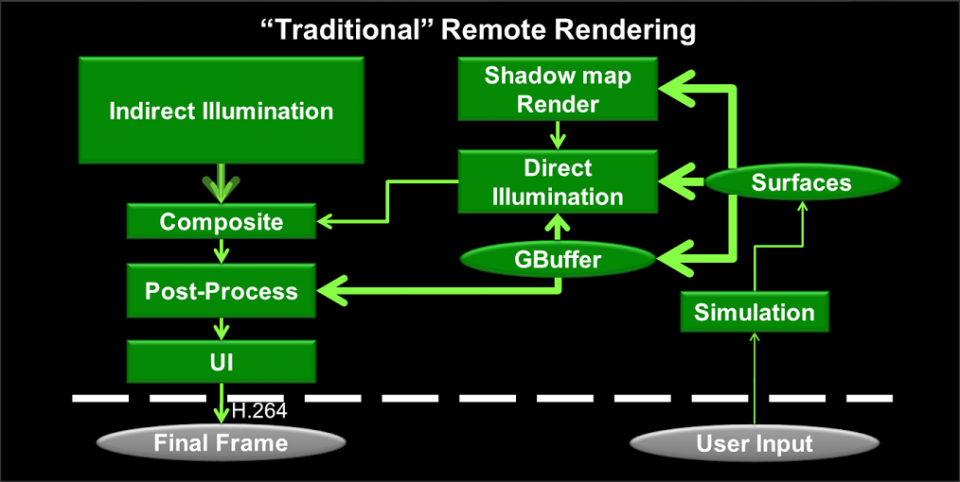

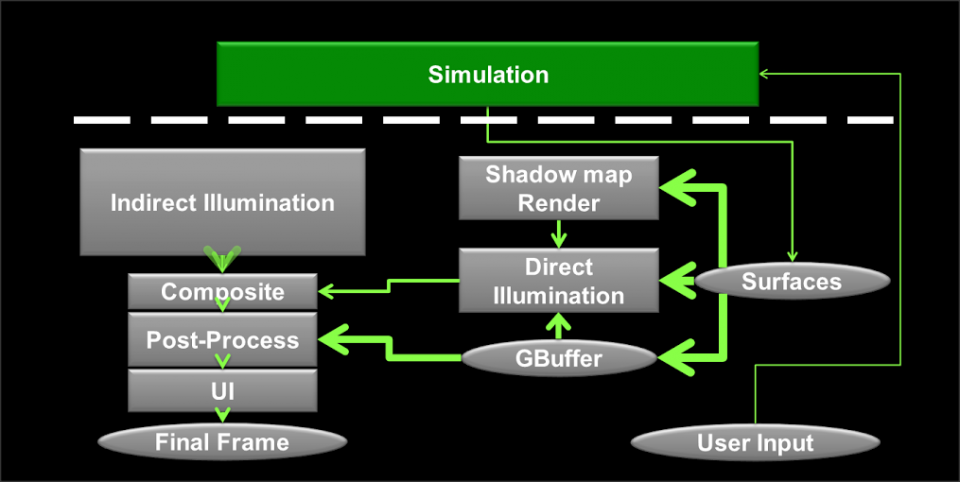

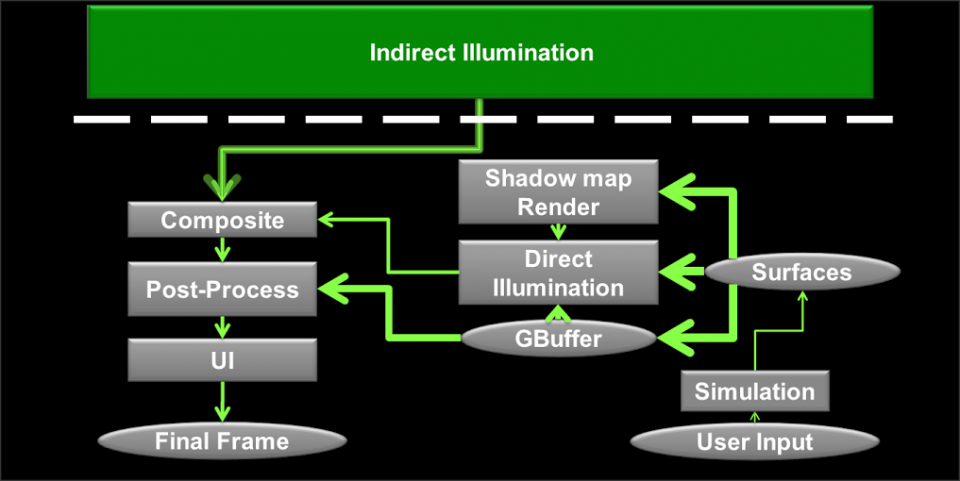

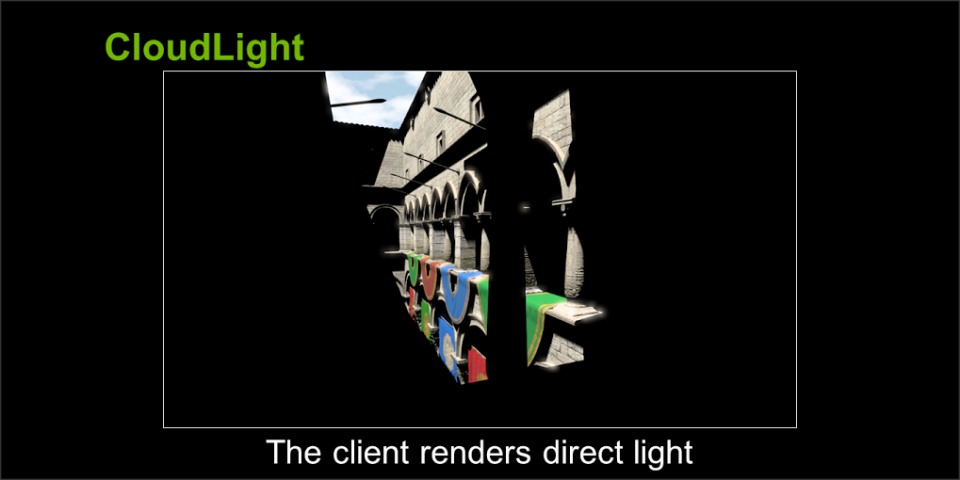

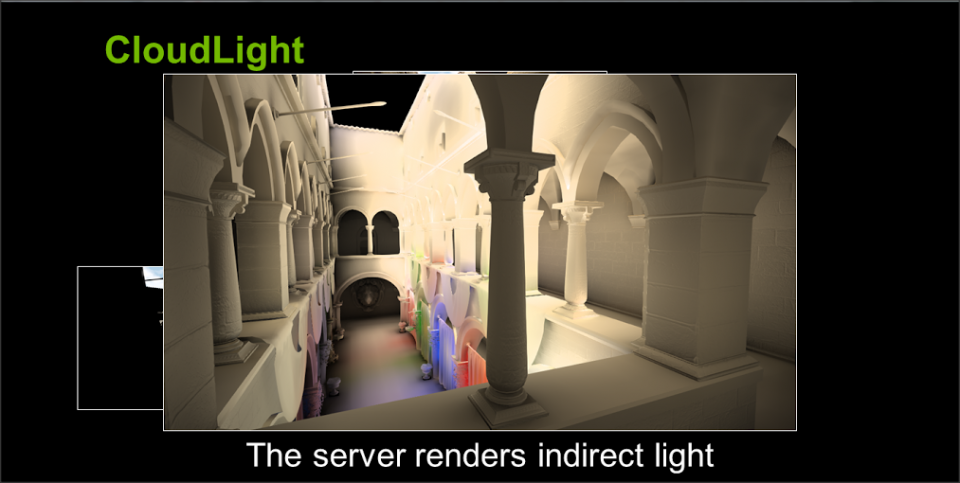

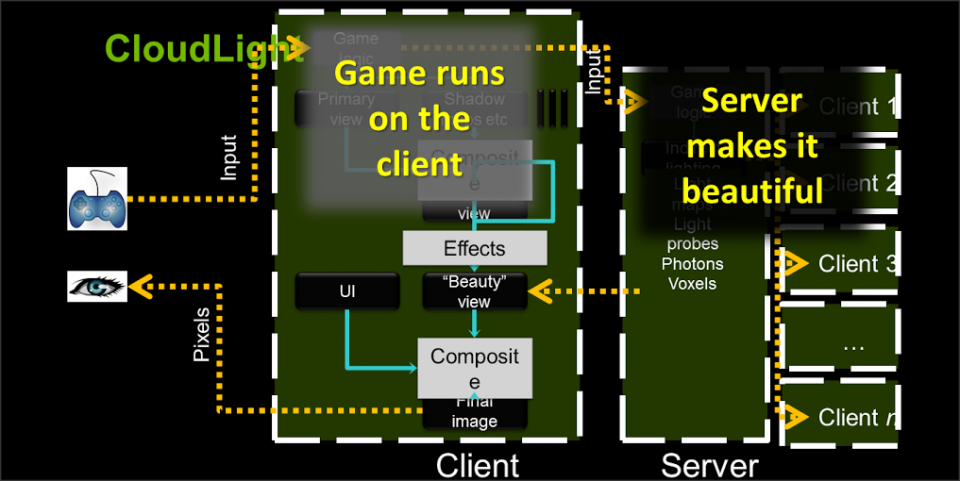

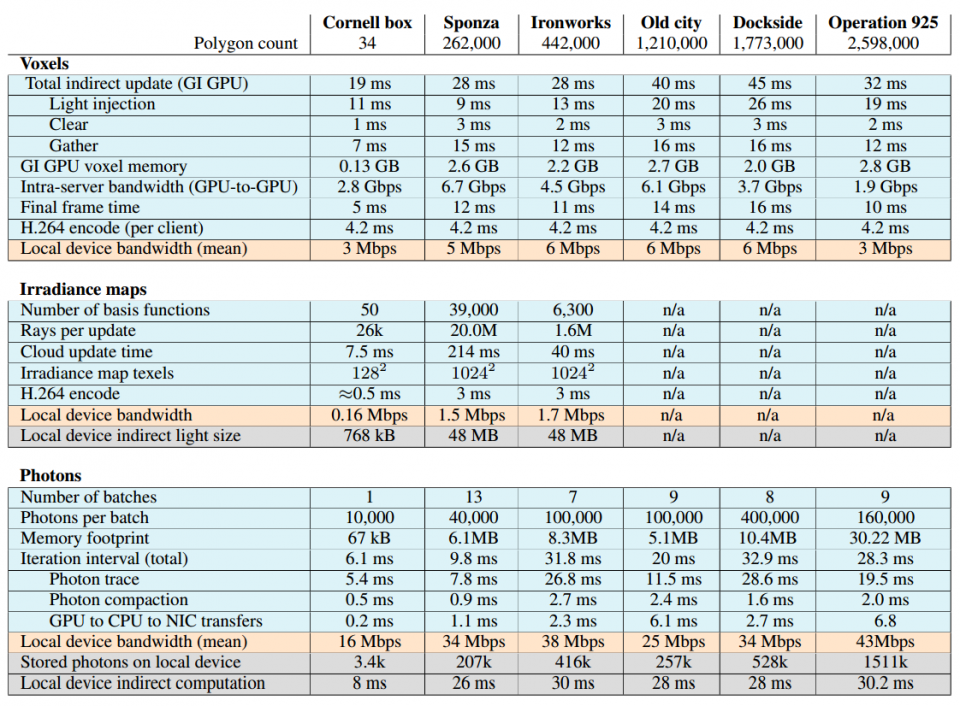

Looks like GAF is picking up on my slides... Maybe someone should tell those GAF "experts" that Azure doesn't need a GPU to do indirect lighting like this. The dual Xeons in the Azure servers are fully capable of processing, streaming, and rendering even without GPUs. You can use it as a render farm just fine, and MS doesn't actually have to add GPUs to their servers.

In fact, forget a simple line of code like indirect lighting...

LOS ANGELES, California – Axceleon is actively demonstrating the Microsoft Azure integrated workflow rendering process and render farms to customers and prospects.

Axceleon has released CloudFuzion for Azure and is showing existing Media & Entertainment customers and prospects how easy it is to integrate existing studio workflows into a cloud based render farm on Microsoft Azure with no impact on the animator or artist. CloudFuzion is integrated with applications such as Autodesk Maya, 3dsMax, Softimage, Adobe After Effects and allows launching of image renders from the application directly to an Azure render farm anywhere in the world. CloudFuzion will move the scene, including any attributes or references, from the studio data repository to the Azure render farm and in turn will move the resulting rendered images back to the studio data repository as part of an automated workflow. The animator or artist is oblivious as to where the images are being processed or rendered.

http://www.cloudfuzion.com/press/Axceleon_Unveils_Microsoft_Render_Farms.html

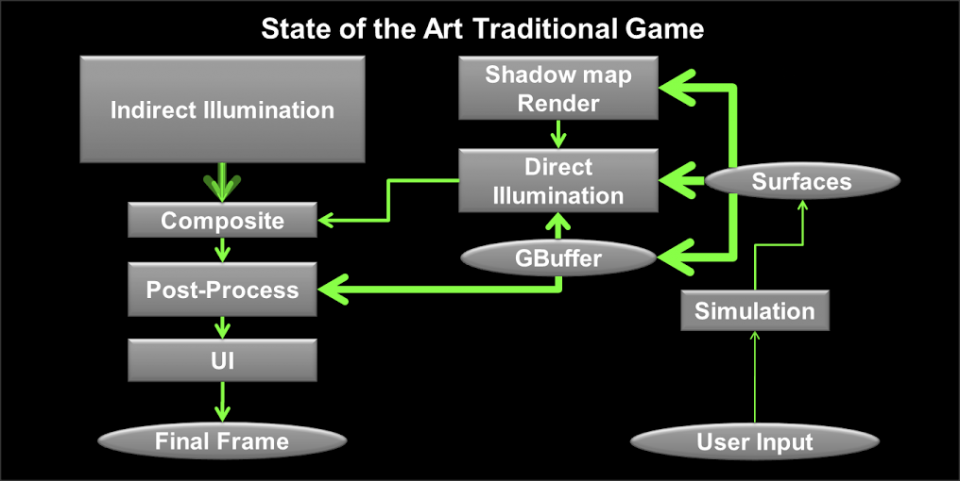

You can run an entire 3D animation program on Azure faster than you can on an Alienware laptop. Rendering, ray-tracing, everything, and stream it down just like you can on a traditional cloud service. Octane Cloud Renderer can also run on Azure and can easily outperform local hardware like this Alienware laptop trying to do the same. In this case Autodesk is running entirely on the cloud, so it's running the entire application, doing the processing, rendering, etc., and just streaming back the results. More like your typical streaming cloud service.

So no, for those of you wondering, MS doesn't need to add GPUs to their servers. The Xeons will be just fine and offer more than enough rendering power for most of your games, especially for partial code processing.

You can run any code on a CPU. Obviously some of it is better suited for GPUs, but CPUs have traditionally been used for developing ray-tracing engines. Only recently have there been enough improvements to get them running on GPUs, and in this case Nvidia is optimizing their code for GPUs because it's what they do. Their GRID is made up of Kepler GPUs. But you can also optimize it to run on CPU servers like Azure too, and I'm sure MS is already doing this. You don't need to change the chips, just change the code.
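To make the "any code runs on a CPU" point concrete, here's a rough sketch of a ray tracer in plain Python, no GPU involved. It is only an illustration, not anything from Nvidia, Octane, or Azure: the names `hit_sphere` and `render`, the single-sphere scene, and the light direction are all assumptions made up for this example.

```python
import math

def hit_sphere(origin, direction, center, radius):
    """Return the distance along the ray to the nearest hit, or None on a miss."""
    # Solve |origin + t*direction - center|^2 = radius^2 for t (a quadratic in t).
    oc = tuple(o - c for o, c in zip(origin, center))
    a = sum(d * d for d in direction)
    b = 2.0 * sum(o * d for o, d in zip(oc, direction))
    c = sum(o * o for o in oc) - radius * radius
    disc = b * b - 4.0 * a * c
    if disc < 0:
        return None  # the ray misses the sphere
    t = (-b - math.sqrt(disc)) / (2.0 * a)
    return t if t > 0 else None

def render(width=40, height=20):
    """Trace one ray per pixel on the CPU and return the image as ASCII rows."""
    center, radius = (0.0, 0.0, -3.0), 1.0   # hypothetical scene: one sphere
    light = (0.577, 0.577, 0.577)            # assumed unit vector toward the light
    shades = " .:-=+*#%@"
    rows = []
    for y in range(height):
        row = []
        for x in range(width):
            # Map the pixel to a point on a view plane at z = -1.
            u = (x + 0.5) / width * 2.0 - 1.0
            v = 1.0 - (y + 0.5) / height * 2.0
            direction = (u, v, -1.0)
            t = hit_sphere((0.0, 0.0, 0.0), direction, center, radius)
            if t is None:
                row.append(" ")
                continue
            point = tuple(t * d for d in direction)
            normal = tuple((p - c) / radius for p, c in zip(point, center))
            # Simple diffuse (Lambert) shading: brightness = max(0, N . L).
            diffuse = max(0.0, sum(n * l for n, l in zip(normal, light)))
            row.append(shades[min(len(shades) - 1, int(diffuse * len(shades)))])
        rows.append("".join(row))
    return rows

if __name__ == "__main__":
    print("\n".join(render()))
```

Every pixel here is independent of the others, which is why the same loop can be spread across many CPU cores or handed to a GPU. Which one wins depends on how the code is optimized for the hardware.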

CPUs are actually preferred for complex code. GPUs are fast, but dumb, and better suited for simpler code that benefits from parallel processing.
