I noticed while on vacation that, even though I'm still out of the country, GPUs were still releasing in the US. I was sort of expecting some GPUs to hit the shelves soon, since hardware websites like Tom's Hardware and overclockers.net were reporting new architectures and really juicy rumors. I was kind of excited.

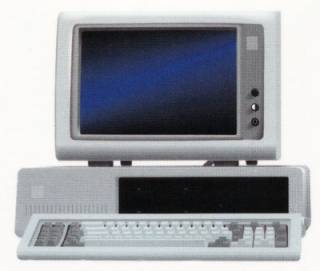

PC

Platform »

The PC (Personal Computer) is a highly configurable and upgradable gaming platform that, among home systems, sports the widest variety of control methods, largest library of games, and cutting edge graphics and sound capabilities.

HD4890 and GTX275: Seriously?

"Well, it's not like there's going to be a major hardware revolution every 3 months. Give it some time. When the GTX 350 is released maybe we'll see some fireworks."

Yeah, I guess I realize that the engineers need some time to think...

Yeah I saw a comparison of the two on Extremetech the other day, not so impressed with the value vs. quality factor of the cards. I'm running a 9800GT currently (I know, but it was cheap) and don't plan on upgrading until the 350 comes out.

See, there you go: 9800GTs, 9800GTXs in SLI. I'm sure you guys have no complaints running your games at this point in time, right? COMPLETE FILLER CARDS!!!

Why would anyone cry for more frequent hardware overhauls as opposed to simply having more good looking games that are optimised and work well with what's out right now, and decently with what was out two or three years ago? We don't need another Crysis, we need developers to actually fully use what they have to work with. Look @ what consoles are pushing in comparison to their specs and tell me you wouldn't want to see PC developers do equally good work, and that instead you're content and happy to spend more money every year. Rich or not, happy to throw all the money in the world at your hobby or not, you should wish to get your money's worth anyway, and game devs don't put up. Not that I don't buy lesser looking games, but it's a matter of context. I won't expect an indie 2-man team to put out the next Crysis, but the big publishers are another matter, and need to step up their game. Not just visually, of course. On the other hand, dev costs are increasing all the time and we're heading for the tougher end of an economic crisis, so lower budget games may be frequent. I'm okay with it, as long as they're fun and aren't sold for 50 euros. We really shouldn't wish for games that don't run on our setups to start appearing already just to get new cards out, you know.

For the record, I own a GTX 285 and I do hope it will last quite a while. My X800 XL would probably still be used if it hadn't broken down. I'm glad the new cards released aren't that much better yet so thanks for that information, it made me a happy PC gamer.

Gotta give it to ya, you make a good point. I think I was curious one day and asked in another forum what the 360 and PS3 would score in 3DMark. Then someone led me to the specs of a 360, and it's somewhere along the lines of a 7300GT and a lesser tri-core processor. So yes, I agree, it's quite sad that with these specs the developers are pumping out visuals that are almost on par with a PC's.
Yes, if development was centered around one such architecture long enough for developers to find its max potential, that would be stupendous. But now that brings to my mind: what would that do to GPU manufacturers? Is nVIDIA just going to sit there and pump out drivers for the next 5 years while developers stare at a GPU thinking what else they could do with it? I mean, this would be phenomenal for the end consumer, because we PC gamers would reap the benefits of our potential and possibly leave consoles in the dust.

"I may be biased, but based on all the reviews I'm seeing, the HD4890 is a card worth investing in."

I don't know about you, bro, but I just don't see a gain of 5 frames in Crysis, 10 frames in Far Cry 2, 15 frames in Left 4 Dead and a 1000 point gain in 3DMark Vantage as worth $250.

It seems to me that there hasn't been a major new graphics evolution since the 8800 series came out. I think this generation of games and the slowing evolution of PC game graphics is the #1 cause (leading people to invest less in graphics cards). The average purchased graphics card price (that is the average price people buy a card at) dropped in recent years to about $60. The high end market is smaller than ever.

darkgoth678 said:

"Then someone led me to the specs of a 360 and it's somewhere along the lines of a 7300GT and a lesser tri-core processor."

Whoever told you that was an idiot. Seriously.

Diamond said:

"Whoever told you that was an idiot. Seriously."

Well, the 7300GT part kind of shocked me, but I think the tri-core CPU is fair. They didn't even say it was that exactly, it was just "it resembles".

"It seems to me that there hasn't been a major new graphics evolution since the 8800 series came out. I think this generation of games and the slowing evolution of PC game graphics is the #1 cause (leading people to invest less in graphics cards). The average purchased graphics card price (that is the average price people buy a card at) dropped in recent years to about $60. The high end market is smaller than ever."

Yeah, I'm with ya though, the high end market is slower than ever. Like Al3xan3r and you said, we just need developers to give us a real reason to be in the high-end market.

"Well, the 7300GT part kind of shocked me, but I think the tri-core CPU is fair. They didn't even say it was that exactly, it was just 'it resembles'."

Lesser tri-core is more or less right. I don't think any 360 game has been CPU limited yet though, and games really shouldn't be until we get some drastically better physics and AI. Was there even an end-user PC triple or quad core when the 360 came out? You wouldn't want to run intensive CPU-based programs on a 360 like video encoding or compression. 7300GT is way way way off though. If he said 7800GT I may have let that slide, even though I think that's a little weak too. 8800GT might be praising it more than it deserves. It's not technically as good as an 8800GT in most ways, but with typical console optimization ability it can surpass an 8800GT sometimes. Some poorly ported games will perform worse than a 6800GT, so if the only 360 game you played was Quake 4, I could see you thinking a 7300GT was better.

I think developers should let off of high end graphics for now. It's just hiking up costs, and a lot of companies are struggling as it is. I think for now the high end graphics market is absolutely dead.

Meh, games are already running @ 30 fps on the consoles, that shows they're limited actually. If they had performance room left, why not lock them @ a higher rate? If not 60 then 40. Anything would look at least a little smoother. Of course, there can be room for further optimization as they get more experienced and learn new tricks, so things WILL improve. It just remains to be seen by how much; with the already familiar architecture there may not be a leap as massive as, say, the early days of the PS1 or 2 vs their final days.

Not CPU limited, GPU limited. That's what it comes down to in almost all cases. Furthermore, most games are locked at 30fps. When you lock the FPS at 30, that can mean the game is actually capable of running at 40fps, 60fps, or even more. If you're staring at a wall in COD4, you're going to be seeing 60fps, while the game might actually be capable of churning out more than 120fps at that moment. 95% of the time, a developer would rather lock at 30fps than have an FPS that's going from ~28fps to ~70fps.

Edit: locking at 40Hz doesn't make sense because HDTVs and NTSC refresh at 60Hz, and half of 60Hz is 30Hz. Locking at 40Hz wouldn't be optimal. I know it would cause havoc with the interlacing on my HDCRT.

I guess developers could always have more physics-reacting objects if the 360 had more CPU power, but most games limit physics by design anyways.

I never implied they run @ a constant speed of 30 fps because the machine can't push more at any one time, like in your looking-@-a-wall situation. It would be impossible to balance performance in that way without locking it, so yes, I'm aware of that. Clearly though, if they lock it @ 30 then they think it would drop to that too often to make it a smooth experience, which is why they chose 30 and not 60, or 45. None of that would be held at a smooth and consistent... constant... as much as 30.

"None of that would be held at a smooth and consistent... constant... as much as 30."

They don't lock at 50fps or 40fps for the same reason they don't lock at 20fps or 27fps or any other odd number. There's probably plenty of 360 games they could lock at 40 or 50, but because of refresh rate standardization, they don't. But anyways, my point was the 360's CPU may not be fantastic, but I don't think it's limiting any games.
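
The refresh rate argument can be sketched in a few lines of Python. This is only a toy model, not how any console actually schedules frames: it assumes a 60Hz display with vsync, a game that finishes frames at a perfectly steady rate, and that each finished frame appears at the next refresh tick. The function name `refreshes_per_frame` and its parameters are made up for illustration.

```python
from fractions import Fraction
from math import ceil


def refreshes_per_frame(target_fps, refresh_hz=60, frames=6):
    """Count how many display refreshes each of the first `frames` frames stays on screen.

    Toy model (assumption): frame k finishes rendering at k * (refresh_hz / target_fps)
    refresh intervals and is shown at the first refresh tick at or after that moment.
    """
    # Exact ratio of refresh intervals per rendered frame (avoids float rounding).
    step = Fraction(refresh_hz, target_fps)
    # Refresh tick at which each frame first appears on screen.
    shown_at = [ceil(k * step) for k in range(1, frames + 2)]
    # A frame stays up until the next frame appears.
    return [later - earlier for earlier, later in zip(shown_at, shown_at[1:])]


if __name__ == "__main__":
    for fps in (60, 40, 30):
        print(fps, "fps lock:", refreshes_per_frame(fps))
```

Under these assumptions a 30fps lock holds every frame for exactly two refreshes, while a 40fps lock alternates between one and two refreshes per frame, which is the kind of uneven pacing that reads as judder even though the average frame rate is higher.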

Meh, I don't see any reason to not lock it at a smoother frame rate if the machine can handle it throughout. And man if they took it even less than 30 fps that'd not be much of a next gen... 25 looked horrible to me back in the Saturn days when Panzer Dragoon (The first, not Zwei or Saga) used it. Ouch.
