I would probably go with a 7970 or a 680 today; their younger siblings are totally fine as well.

Don't worry about DX12. It seems it won't be coming to Windows 7, and a lot of people still think the Metro UI of Windows 8 is demented (even though the OS itself has an awesome task manager and a slight performance increase).

It's similar to how developers didn't make Vista exclusives.

You should probably stay away from multi-GPU solutions until you are informed and experienced enough with hardware to make that decision without asking anyone else.

@corvak:

The 7970 GHz Edition is pretty much just a 7970 with a new BIOS, like you said.

I've been running my own 7970 at 1150 MHz since launch day on reference cooling, and that's without increasing the voltage, which means the temperature difference is minimal.

Most if not all 7970s will do 1 GHz with ease.

@mirado:

Yeah, 7970s have a backup BIOS, but even if they didn't, you could just do it like in the olden days: drop in another graphics card to give you a display so you can type in the commands to flash back to the stock BIOS, or if you are really hardcore, just type them in blind.

As long as the card didn't fry from the bad BIOS, that'll work, and if it did fry, the backup read-only BIOS wouldn't do much good anyway.

@alistercat: Nvidia and AMD aren't reacting to the new consoles; they are constantly pushing the envelope. There isn't any higher chance of a huge leap or new tech being discovered this year compared to any other year in the business.

After all, a lot of the PS4 is AMD chips, which are PC parts that have been in R&D for a lot of years and then adjusted for consoles. It's not like AMD is going to be blindsided by its own tech.

@mr402:

Well, some situations in BF3 make my 7970 seem lacking even at 1080p, but that's just because I want it silky smooth. Everyone has their own thresholds.

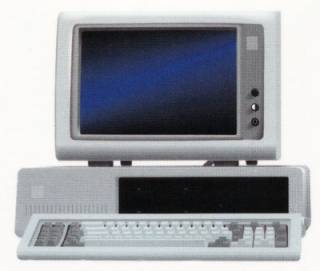

@deathstriker666: Do you have any actual specs on the upcoming 8000 series, or are you using it as a typical example? All I've heard about the 8000 series so far is that there will only be an OEM series initially, and that it is a complete rebrand of the 7000 series. Since it's OEM, it won't be sold at retail at all, only as part of branded computers.

@myketuna:

Do you really have to read benchmarks to know that your card is too weak?

What about playing an actual game and just feeling that it is stuttering and inadequate?

If you still think games run great, there is no need to upgrade just because a benchmark said otherwise.
