I built the murder slingshot

By bushpusherr

(*Full disclosure: this blog will discuss elements that I built for my Twitch stream. I don't want this to come off as sneaky self-promotion, because I really just want to geek out for a bit about what I built, so let's get this out of the way up front. Yes, of course it's cool if people check my stuff out if it speaks to their interests, but no, that isn't the focus of this blog. Let's get into it!*)

For whatever reason, this Bombcast segment has lingered in the back of my mind ever since I heard it in 2012.

In the last few months I finally began putting real work into an idea I'd had: combine my software development experience & education with my interests in video editing & production to build a custom application for a fun stream to fool around on with my friends. At perhaps the exact moment I decided I wanted something silly to visually represent people getting banned from my channel, this Bombcast clip sprinted to the front of my mind and I didn't even consider doing anything else. I wanted to launch my enemies into the sun with a slingshot.

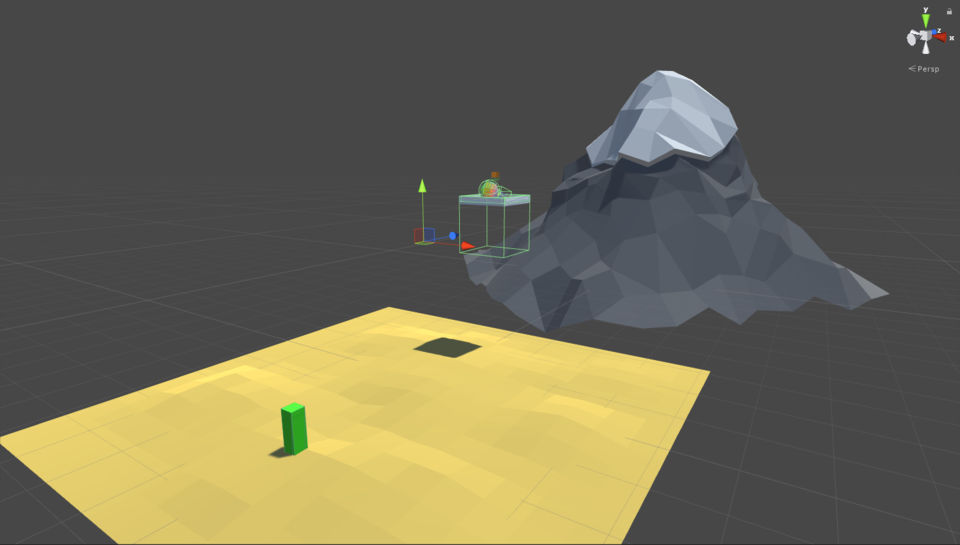

I immediately began experimenting (in Unity, where I developed all of this) with slingshot physics in a test environment where I launched a low-poly avatar into a mountain.

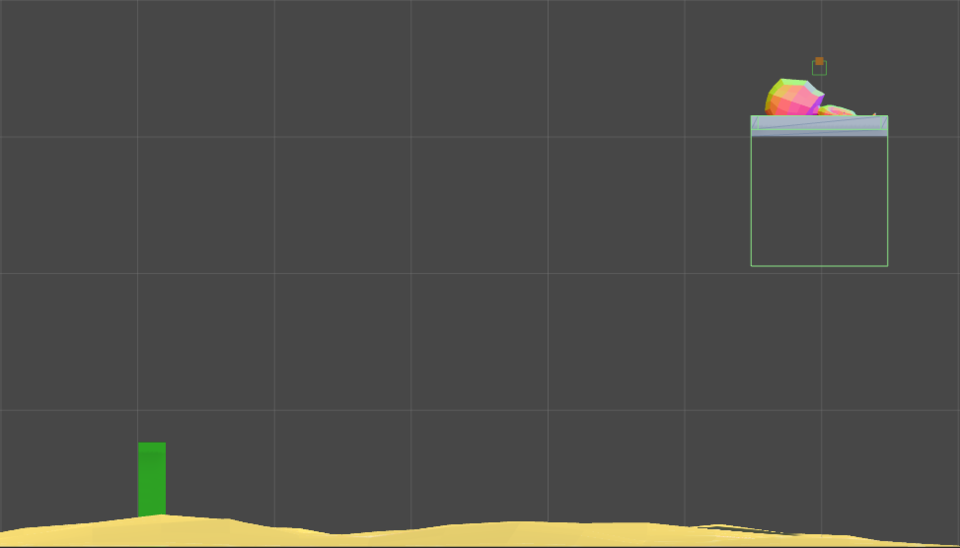

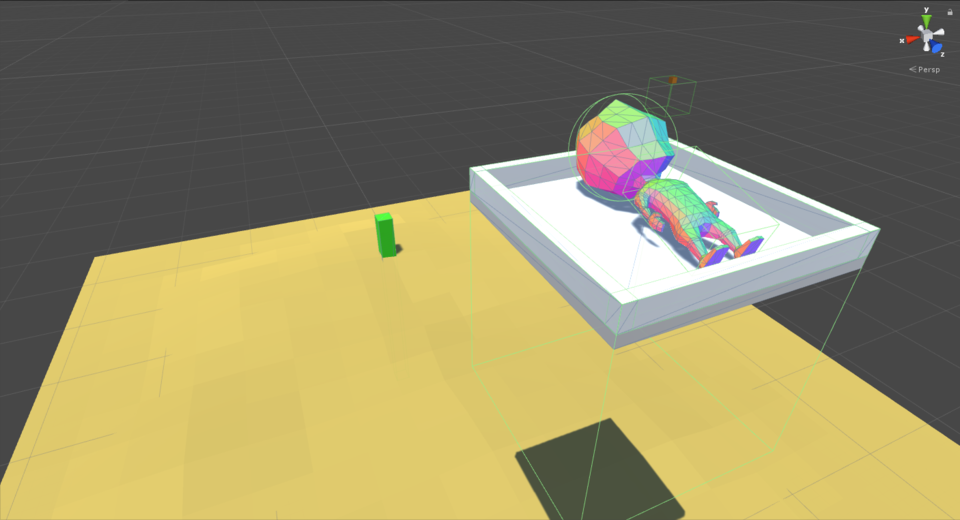

I eventually settled on a solution where an invisible anchor point in space (represented here by the brown cube) would be connected to the center of the launch chassis (the white "box" that our avatar resets in) with a single spring joint. A spring joint continuously applies force to try to return the connected body to its original distance from the anchor it's connected to, pulling harder the further it's stretched. So, in this case, our launch chassis will fight against gravity to stay about a half-meter below our invisible anchor point. If the spring is strong enough to overcome gravity, the chassis will simply hang in the air (perhaps swaying slightly due to the position and mass of the avatar resting on the chassis).

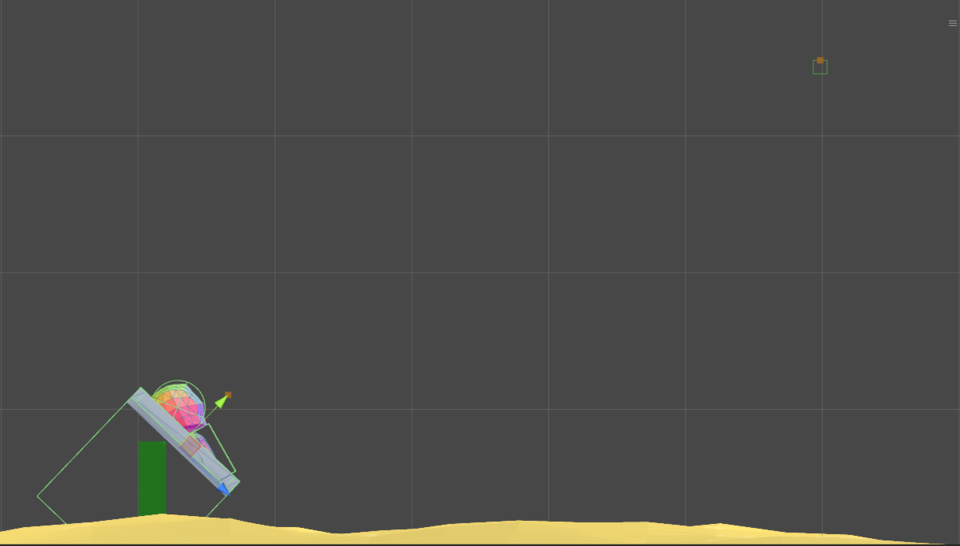
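To make the "hanging" behavior concrete, here's a minimal 1D sketch of a body on a damped spring under gravity. It is not my Unity code. The function name, masses, stiffness, and damping values are all made up for illustration, and I'm assuming a plain Hooke's-law spring plus a damper, integrated with semi-implicit Euler at a 0.02 s step. Unity's `SpringJoint` does expose `spring` and `damper` settings, but I'm not claiming this matches its internals.

```python
GRAVITY = 9.81  # m/s^2, pulling in -y

def settle_height(stiffness, damper, mass, rest_length, anchor_y=0.0,
                  dt=0.02, steps=2000):
    """Simulate a body hanging from a damped spring and return where it settles.

    Hypothetical helper: Hooke's-law spring + linear damper, not Unity's solver.
    """
    y = anchor_y - rest_length   # start exactly at the spring's rest length
    v = 0.0
    for _ in range(steps):
        stretch = (anchor_y - y) - rest_length     # > 0 when hanging too low
        force = stiffness * stretch - damper * v   # spring pulls up, damper resists motion
        accel = force / mass - GRAVITY
        v += accel * dt   # semi-implicit Euler: update velocity first...
        y += v * dt       # ...then position using the new velocity
    return y

# A 10 kg chassis on a 0.5 m spring sags a little past its rest length,
# settling where stiffness * stretch == mass * GRAVITY.
print(settle_height(stiffness=2000.0, damper=50.0, mass=10.0, rest_length=0.5))
```

The body doesn't sit exactly at the rest length. It settles where the spring's pull balances gravity, which is about 10 × 9.81 / 2000 ≈ 0.049 m of extra stretch with these made-up numbers. A stiffer spring sags less.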

Given this information, you have perhaps already deduced how the actual slingshot launch works. By disabling the physical components of these objects (disabling the gravitational pull, stopping the spring from applying its force, etc.), I can freely re-position ("pull back") the launch chassis towards the mounting point and leave it there until I'm ready to fire.

By simply re-enabling the physical components, the spring will naturally pull the chassis towards the anchor point. That pull accelerates the chassis through the anchor point, and its momentum carries it beyond. Once that occurs, the spring force will eventually counteract that forward momentum and begin pulling the chassis back from the other direction. Up to this point, gravity and the upward force on the chassis were the only things keeping our avatar connected to our chassis as it thrust towards the anchor point. But with no spring pulling it back, our avatar is now free to keep its forward momentum as the chassis below it reverses course.
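Here's a rough 1D sketch of that launch, under simplifying assumptions that are mine and not the real scene:

- The launch is straight up.
- The spring obeys Hooke's law in both directions.
- Chassis and avatar move as one body until they separate.

"Pulling back" with physics off is just placing the chassis lower. Releasing is just starting the integration loop. The chassis floor can only push the avatar, never pull it. So the avatar flies free the moment the chassis would slow down faster than gravity alone would slow the avatar.

```python
import math

GRAVITY = 9.81  # m/s^2, pulling in -y

def launch(stiffness, chassis_mass, avatar_mass, rest_length, pull_back,
           dt=0.001, max_steps=100_000):
    """Release a pulled-back chassis; return the avatar's upward speed
    at the moment it separates from the chassis (hypothetical helper).

    Anchor sits at y = 0 and the chassis rests rest_length below it.
    """
    y = -(rest_length + pull_back)   # "pull back" = just place it lower
    v = 0.0
    total_mass = chassis_mass + avatar_mass
    for _ in range(max_steps):
        stretch = -y - rest_length   # > 0 while below the rest point
        accel = stiffness * stretch / total_mass - GRAVITY  # avatar rides along
        # Contact force on the avatar is avatar_mass * (accel + GRAVITY). It can't
        # go negative, so once accel < -GRAVITY the avatar leaves with speed v.
        if accel < -GRAVITY:
            return v
        v += accel * dt
        y += v * dt
    raise RuntimeError("avatar never separated; spring too weak?")

speed = launch(stiffness=4000.0, chassis_mass=10.0, avatar_mass=2.0,
               rest_length=0.5, pull_back=1.0)
# Energy check: 0.5*k*d^2 = 0.5*M*v^2 + M*g*d at separation
expected = math.sqrt(4000.0 * 1.0**2 / 12.0 - 2 * GRAVITY * 1.0)
print(speed, expected)
```

In this toy model, separation happens right as the chassis passes its rest point. A stronger spring, or a longer pull, sends the avatar off faster.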

You may have noticed the large, hollow, green outline of a cube beneath our chassis. This is a collision volume that only interacts with our avatar, and its size lets us use higher spring forces to pull the chassis while the avatar keeps its position on top of it without clipping through the thin chassis floor. There is a lot more I could say about the update rates of physics calculations vs. transform positions, the nature of rigid bodies, etc., to explain why this large collision volume works here, but that's a bit more technical than I'd like to get.
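For the curious, here's a generic illustration of one common reason a thicker collider helps; I'm presenting it as an assumption, not the full story of this scene. Physics runs in fixed steps (Unity's default fixed timestep is 0.02 s). A fast-moving object can jump clean over a thin collider between two steps ("tunneling"). The helper name and numbers below are hypothetical.

```python
def hits_volume(start_y, velocity_y, volume_top, thickness, dt=0.02, steps=50):
    """Move a point in fixed physics steps and report whether any sampled
    position lands inside the slab [volume_top - thickness, volume_top]."""
    y = start_y
    for _ in range(steps):
        y += velocity_y * dt          # discrete step: positions in between are never checked
        if volume_top - thickness <= y <= volume_top:
            return True
    return False

# At 10 m/s the point moves 0.2 m per step: it skips a 5 cm floor entirely
# but can't miss a 1 m deep volume.
print(hits_volume(0.3, -10.0, 0.0, thickness=0.05))  # thin floor
print(hits_volume(0.3, -10.0, 0.0, thickness=1.0))   # big volume
```

Unity's Rigidbody also offers continuous collision detection modes as another way around this. A bigger trigger-free volume is the cheap alternative.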

Now that I had that part solved to my satisfaction, I started thinking about the aesthetics of the sun. Of course, a pretty specific image quickly came to mind. I'm guessing @vinny would have probably thought of the same scene. Can you name a more iconic star in video games than the one seen from the view of the Illusive Man's room in Mass Effect 2 & 3?

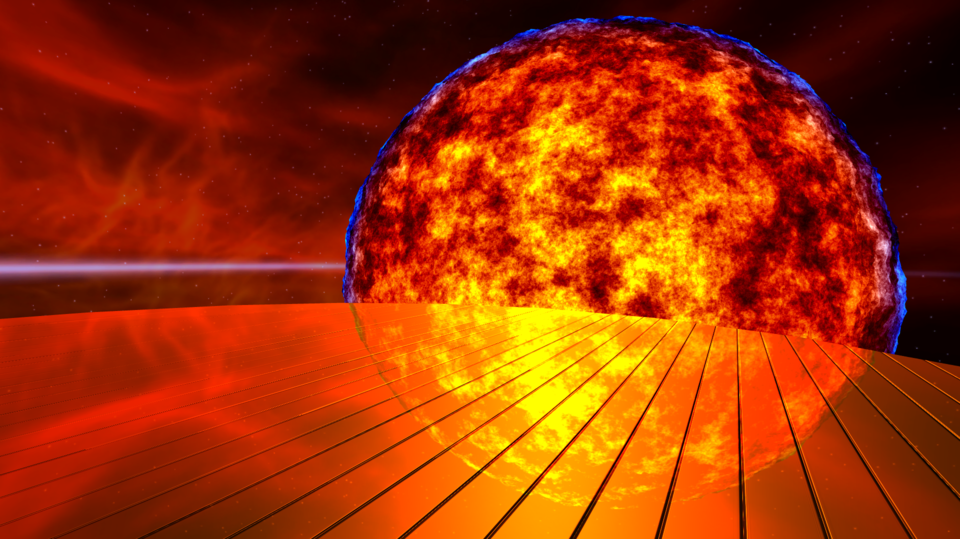

I'll show off where this inspiration eventually took me before getting into the details:

To get the simpler stuff out of the way up front: the background is a straightforward skybox, and the floor is the top of a large cylinder (default Unity model) with a shaded mirror reflection script & material applied, plus a basic tiled normal map to achieve the grooves in the floor. The bright lighting on the floor isn't actually coming from the sun itself; it's simply a separate directional light shining onto it.

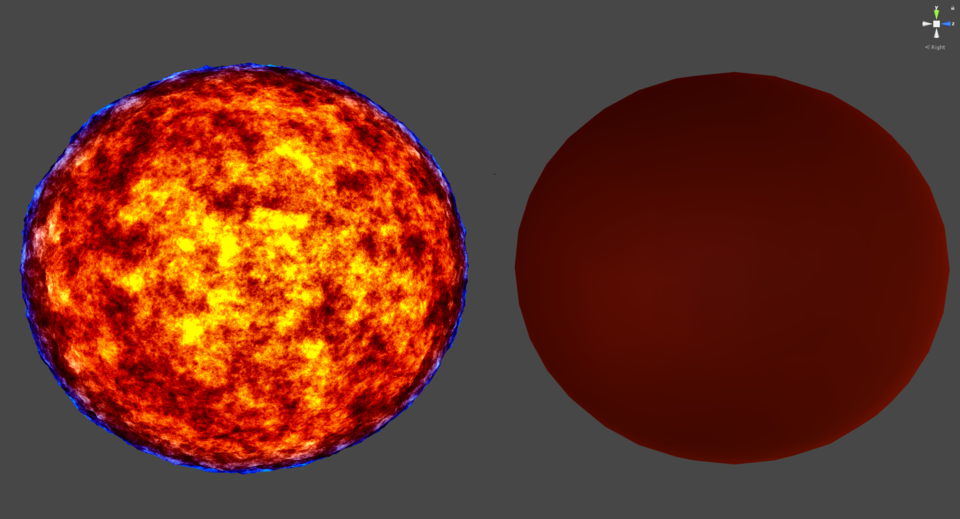

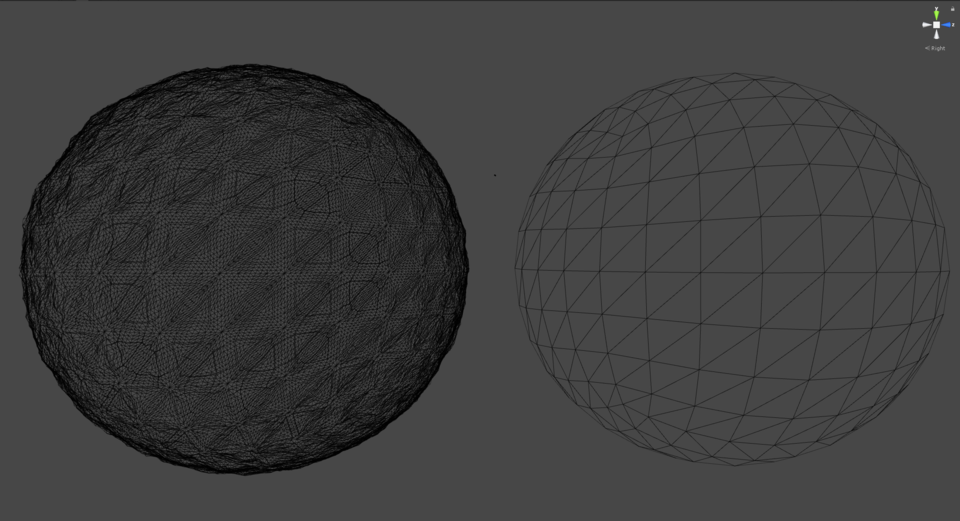
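Planar mirror scripts in Unity typically work by rendering the scene a second time from a camera mirrored across the reflective surface. I'm describing the general technique here, not the internals of the specific script I used. The core math is just reflecting a point across a plane: p' = p − 2((p − q) · n) n, where q is any point on the plane and n is its unit normal. Function names below are hypothetical.

```python
import math

def reflect_point(point, plane_point, plane_normal):
    """Mirror a 3D point across the plane through plane_point with normal plane_normal."""
    length = math.sqrt(sum(n * n for n in plane_normal))
    unit = [n / length for n in plane_normal]
    # signed distance from the plane along its normal
    dist = sum((p - q) * n for p, q, n in zip(point, plane_point, unit))
    return tuple(p - 2.0 * dist * n for p, n in zip(point, unit))

def reflect_direction(direction, plane_normal):
    """Mirror a direction vector (e.g. the camera's forward); only the normal matters."""
    return reflect_point(direction, (0.0, 0.0, 0.0), plane_normal)

# A camera 3 m above a floor at y = 0 gets a mirrored twin 3 m below it,
# looking up instead of down.
print(reflect_point((1.0, 3.0, 2.0), (0.0, 0.0, 0.0), (0.0, 1.0, 0.0)))
print(reflect_direction((0.0, -1.0, 1.0), (0.0, 1.0, 0.0)))
```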

The really fun part here is the star, of course. I just used the Unity standard sphere model as the mesh, and everything else is done in a shader/material that I wrote. I'm fortunate enough to have a CS master's focusing on real-time graphics, so this part was especially exciting for me to work on. Here is a side-by-side comparison of the final sun vs. the standard sphere to show exactly how much the shader is changing:

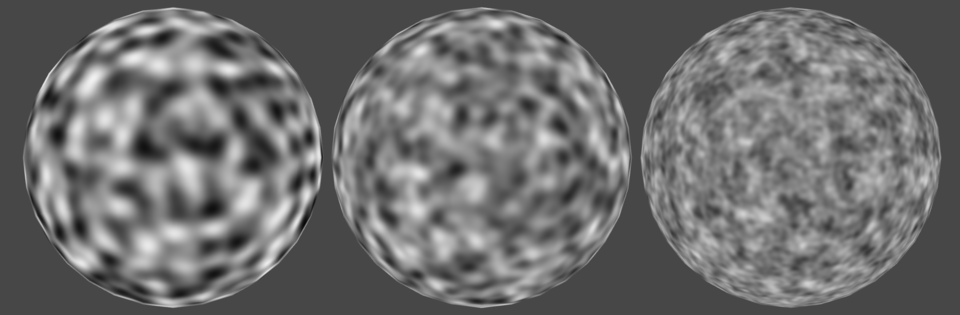

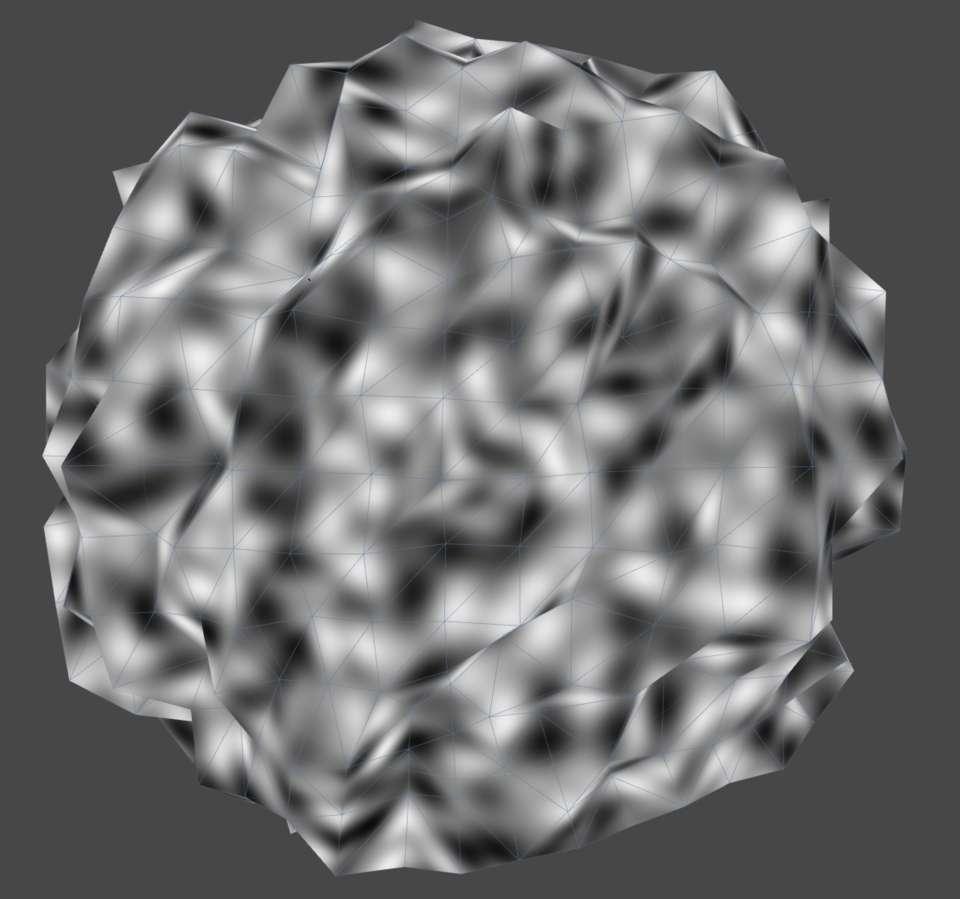

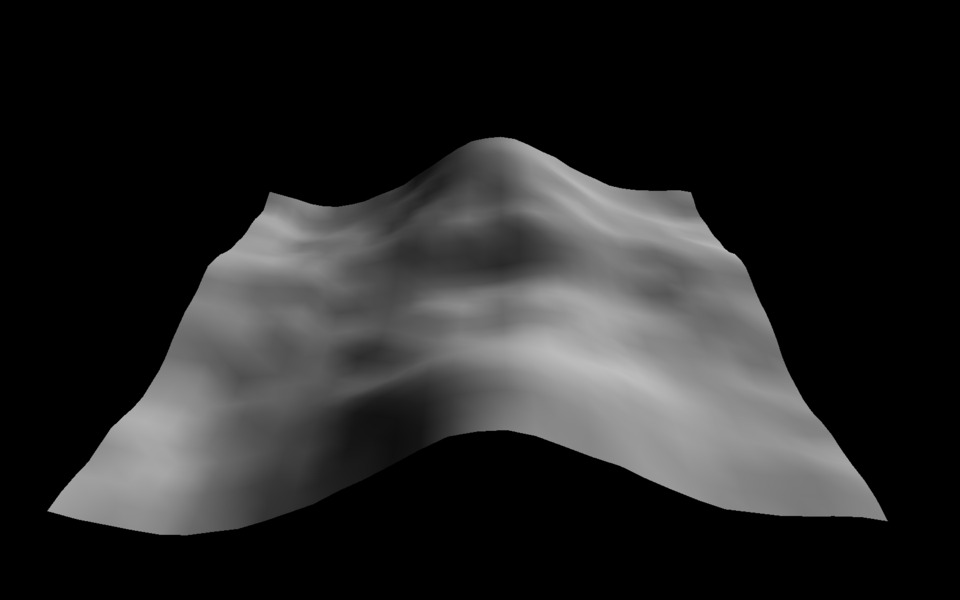

The primary tool at play here is something called Perlin noise, a mathematical function developed by Ken Perlin that generates smoothly varying pseudo-random values, which here range from 0 (black) to 1 (white). Multiple sets of these values can be generated with differing frequencies, amplitudes, and other modifications and then combined to create some really intricate noise. You can also vary one or more of these parameters over time to animate the noise. Here is what the sun would look like if I strictly output the value of my Perlin noise implementation and didn't colorize it:

This material uses 12 "layers" of random values, each with slightly different parameters, that combine to form this smoky appearance. You can adjust how much impact each of these layers has on the final product, as well. Here is what this would look like with 1, 2, and 4 layers, respectively:

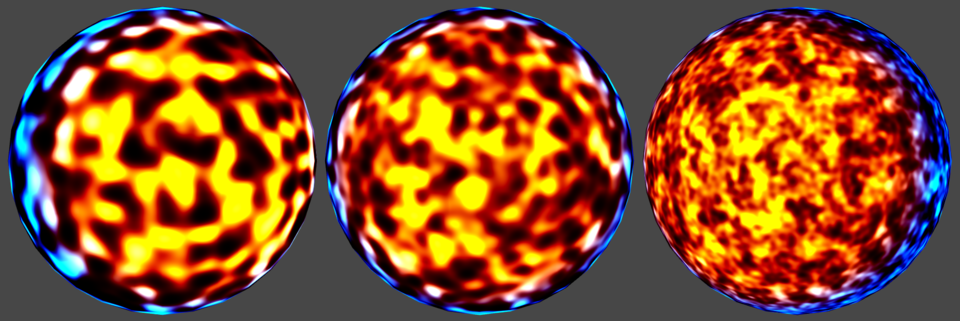

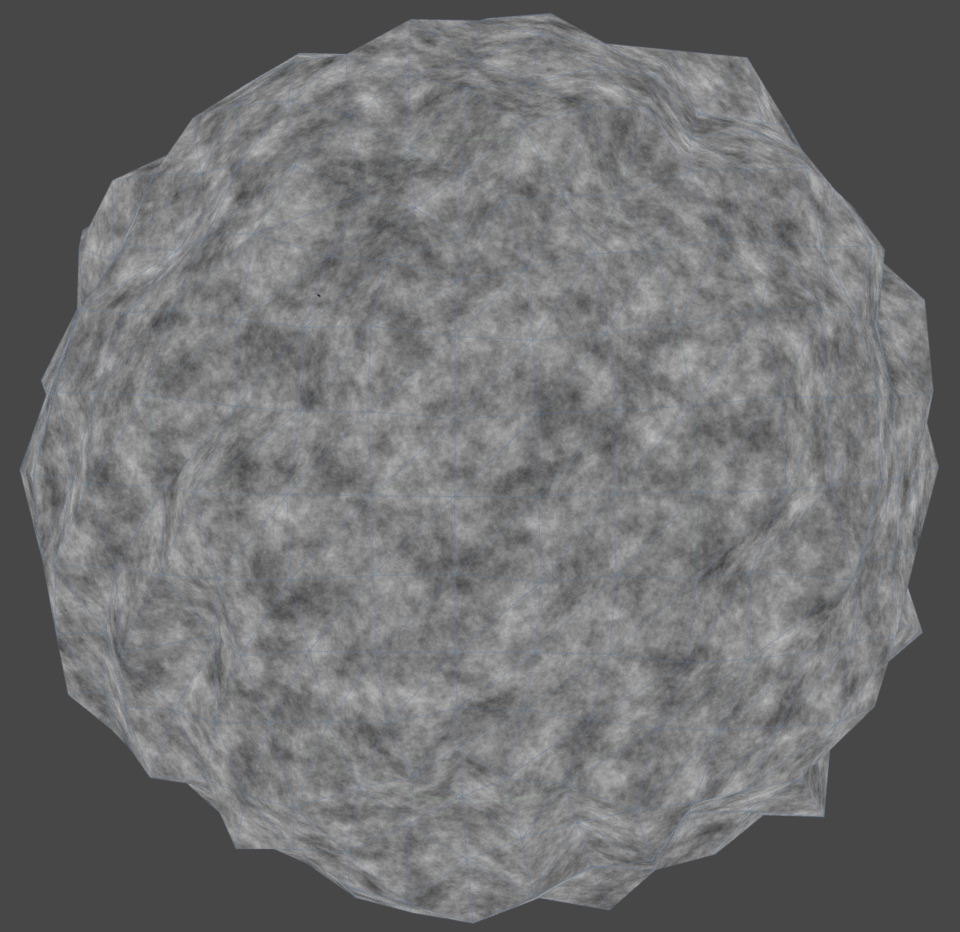
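If you want to play with the idea outside a shader, here's a compact CPU-side sketch of classic 2D Perlin gradient noise plus a layered sum with per-layer weights. It is not my actual shader. A sphere surface would more naturally sample 3D noise, and 2D is used here purely for brevity. The weights, the per-layer offsets, and the `lacunarity` (frequency multiplier per layer) are all hypothetical choices.

```python
import math
import random

# 8 gradient directions, a common choice for 2D Perlin noise.
GRADIENTS = [(1, 0), (-1, 0), (0, 1), (0, -1),
             (1, 1), (-1, 1), (1, -1), (-1, -1)]

def make_permutation(seed=0):
    """Shuffled 0..255 lookup table, doubled so lattice lookups never run off the end."""
    table = list(range(256))
    random.Random(seed).shuffle(table)
    return table + table

def fade(t):
    """Perlin's smoothstep 6t^5 - 15t^4 + 10t^3, for smooth blending between corners."""
    return t * t * t * (t * (t * 6 - 15) + 10)

def perlin2(x, y, perm):
    """Classic 2D gradient noise: zero at integer points, roughly in [-1, 1]."""
    xi, yi = math.floor(x), math.floor(y)
    xf, yf = x - xi, y - yi
    xi, yi = xi & 255, yi & 255

    def corner(ix, iy, dx, dy):
        # hash the lattice corner to a gradient, then dot it with the offset vector
        gx, gy = GRADIENTS[perm[perm[ix] + iy] & 7]
        return gx * dx + gy * dy

    n00 = corner(xi, yi, xf, yf)
    n10 = corner(xi + 1, yi, xf - 1, yf)
    n01 = corner(xi, yi + 1, xf, yf - 1)
    n11 = corner(xi + 1, yi + 1, xf - 1, yf - 1)
    u, v = fade(xf), fade(yf)
    bottom = n00 + u * (n10 - n00)
    top = n01 + u * (n11 - n01)
    return bottom + v * (top - bottom)

def layered_noise(x, y, perm, layers, weights=None, lacunarity=2.0, time=0.0):
    """Weighted sum of noise layers, each at a higher frequency than the last.

    weights sets each layer's impact (default: halve per layer); time scrolls
    every layer so the pattern animates. Output is clamped to [0, 1].
    """
    if weights is None:
        weights = [0.5 ** i for i in range(layers)]
    total = 0.0
    frequency = 1.0
    for i in range(layers):
        # offset each layer so their features don't line up
        total += weights[i] * perlin2(x * frequency + time + 17.31 * i,
                                      y * frequency + 5.77 * i, perm)
        frequency *= lacunarity
    total /= sum(weights[:layers])
    return min(1.0, max(0.0, 0.5 * total + 0.5))

perm = make_permutation(seed=42)
for n in (1, 2, 4, 12):
    print(n, layered_noise(0.37, 1.91, perm, layers=n))
```

Each extra layer adds finer detail at a smaller weight. That is why going from 1 to 4 layers changes the look a lot, while layers past that mostly add subtle texture.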

I then took the output of this Perlin function and put it through a variety of power functions (squared, cubed, etc.) with some other clamping & fine adjustments involved, and then multiplied those differing results by various colors. There is a *bit* more going on there, but again, probably too technical. If that colorization were applied to the previous image, we'd have this:

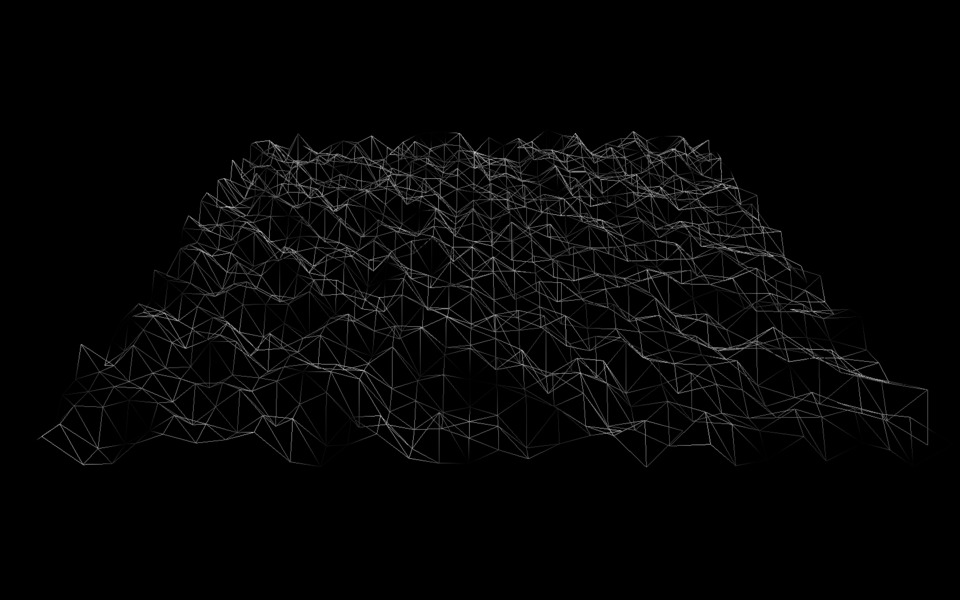

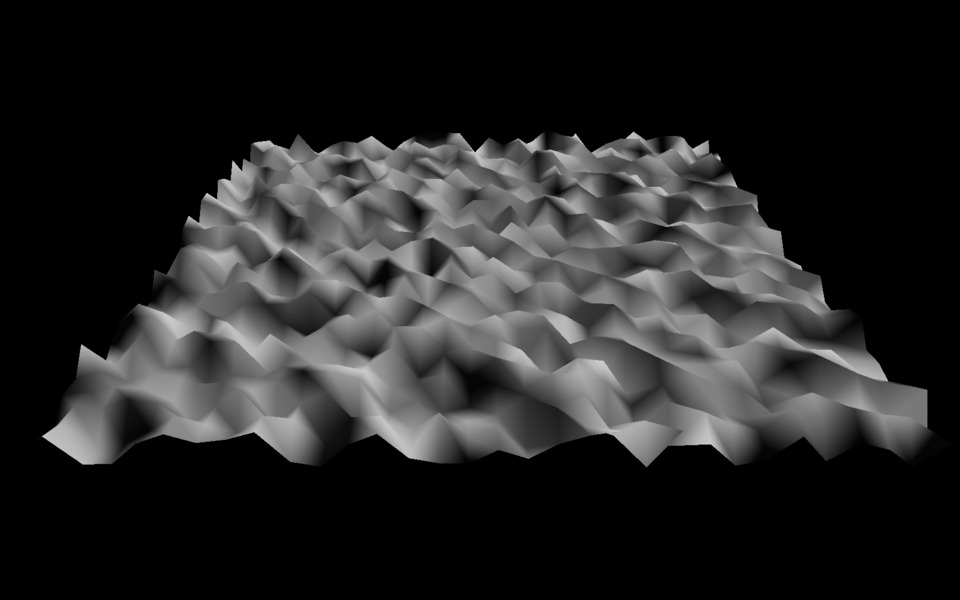

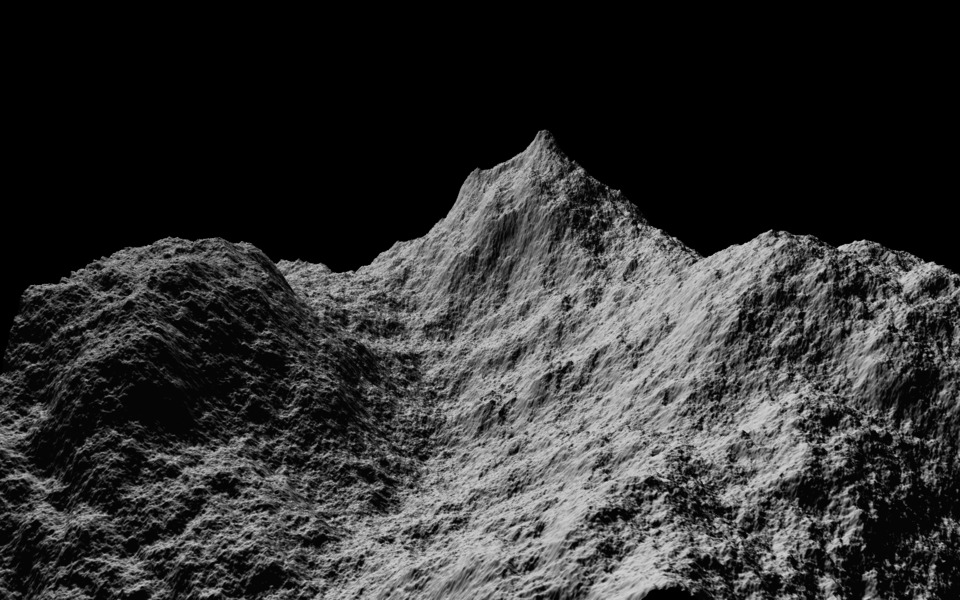
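Here's a rough sketch of that kind of colorization: raise the noise value to a few different powers, clamp each result, tint each one with a color, and add them up. The exponents, scales, and colors below are made-up placeholders, not my material's values. Higher powers only light up where the noise is already bright, which is what pushes the hottest spots toward white.

```python
def clamp01(x):
    return min(1.0, max(0.0, x))

# Hypothetical bands: (exponent, scale, rgb tint). Not the real sun's values.
BANDS = [
    (1.0, 1.0, (0.6, 0.1, 0.0)),   # deep red across most of the surface
    (2.0, 1.5, (1.0, 0.4, 0.0)),   # orange where the noise is brighter
    (4.0, 2.0, (1.0, 0.9, 0.6)),   # near-white hot spots only at the peaks
]

def colorize(n, bands=BANDS):
    """Map a noise value n in [0, 1] to an RGB color in [0, 1]^3."""
    r = g = b = 0.0
    for exponent, scale, (cr, cg, cb) in bands:
        w = clamp01(scale * n ** exponent)   # power curve, then clamp
        r += w * cr
        g += w * cg
        b += w * cb
    return (clamp01(r), clamp01(g), clamp01(b))

for n in (0.0, 0.3, 0.6, 1.0):
    print(n, colorize(n))
```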

The last major component to this (which will really show through primarily in the animated version) is that I am also slightly displacing the vertices of the mesh by this noise value as well. Basically, I'm moving the vertices of the mesh a little bit closer or further away from the object's center based on the [0,1] output from the noise function. This effectively just makes the sphere bumpy/jagged. To show an extreme example to illustrate the concept, this is what a pretty high displacement intensity would look like with a single layer of noise:

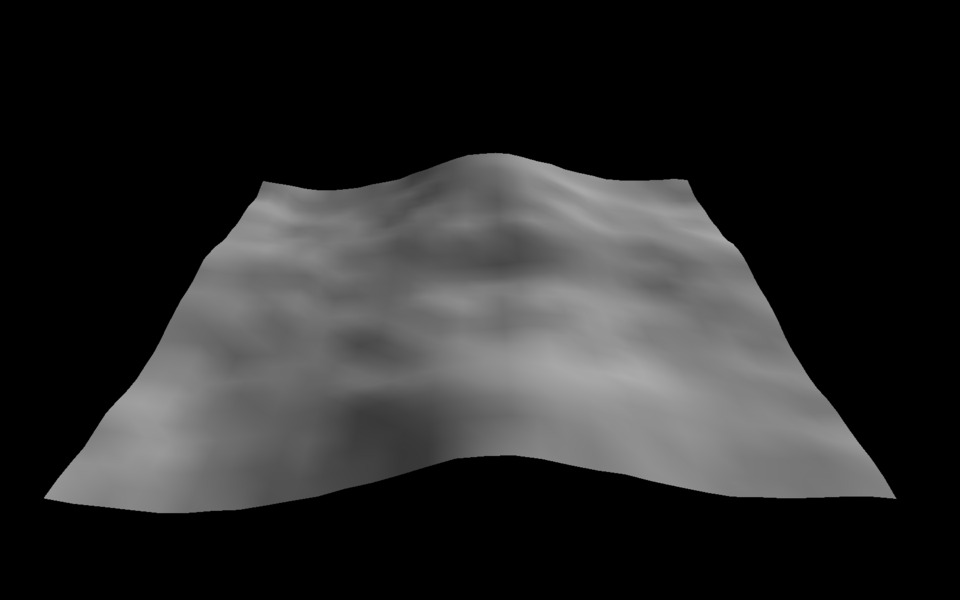
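Vertex displacement itself is simple. Take the direction from the object's center to the vertex, and move the vertex along it by an amount driven by the noise. In the sketch below, the helper name and the `intensity` parameter are hypothetical. Centering the noise on 0.5 is my assumption, so that values below 0.5 pull the vertex inward and values above push it outward.

```python
import math

def displace_vertex(vertex, noise_value, intensity, center=(0.0, 0.0, 0.0)):
    """Move a vertex toward/away from center based on a noise value in [0, 1]."""
    offset = [v - c for v, c in zip(vertex, center)]
    length = math.sqrt(sum(o * o for o in offset))
    direction = [o / length for o in offset]
    # assumption: 0.5 means "no change", 0 pulls in, 1 pushes out
    new_length = length + (noise_value - 0.5) * intensity
    return tuple(c + d * new_length for c, d in zip(center, direction))

top = (0.0, 0.0, 1.0)                 # a vertex on a unit sphere
print(displace_vertex(top, 1.0, 0.2))  # pushed out to radius 1.1
print(displace_vertex(top, 0.0, 0.2))  # pulled in to radius 0.9
```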

Another important characteristic of this displacement is that, even with the 12 layers of noise I ended up using, we can still only displace as many vertices as are actually there. Here is the same displacement but with the updated noise values:

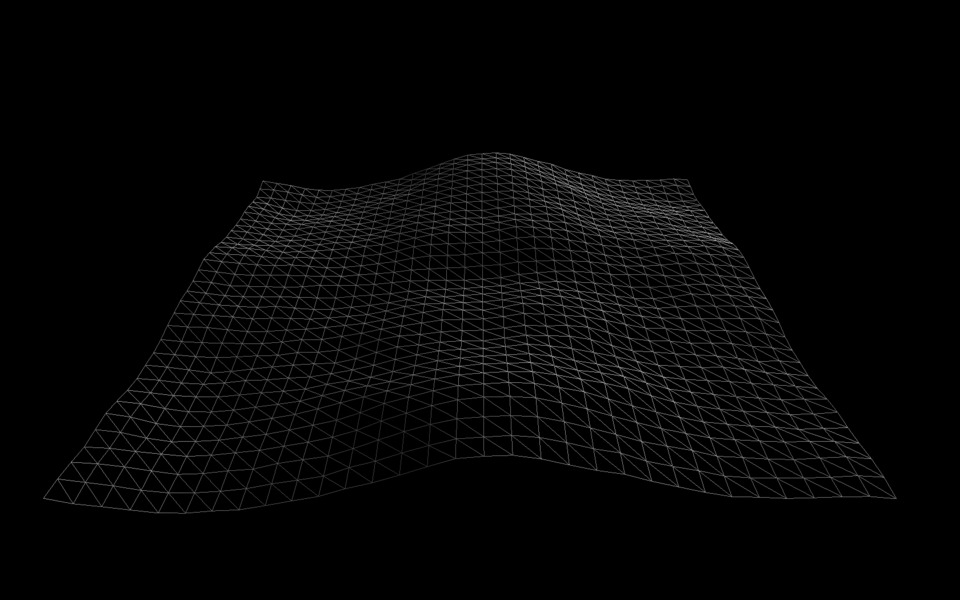

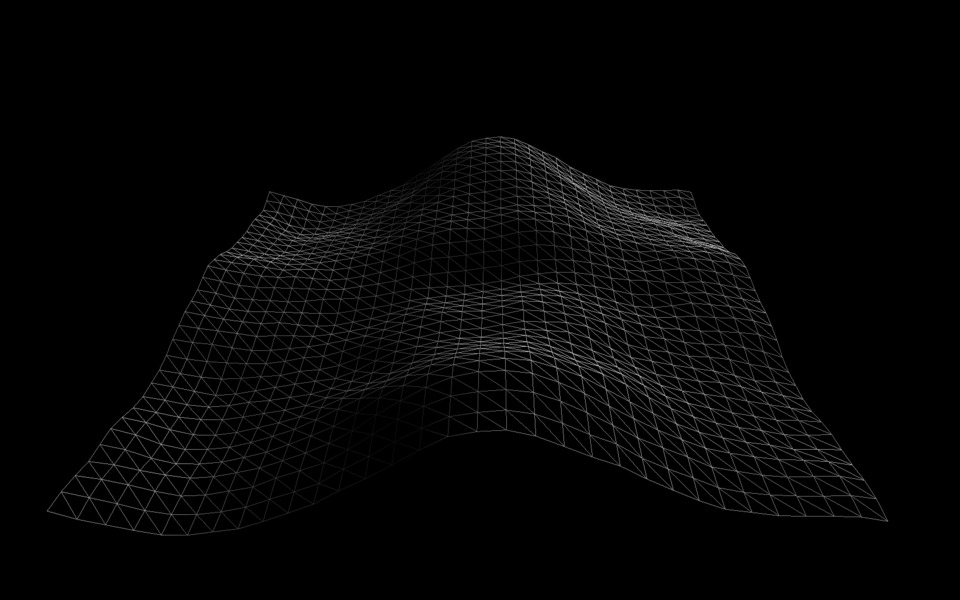
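A 1D analogy shows why vertex count caps the detail. Put vertices around a ring and displace each one by a bumpy function, with everything between two vertices drawn as a straight edge. Then measure how far those flat edges stray from the true bumps. The `detail` function is a hypothetical stand-in for high-frequency noise.

```python
import math

def detail(theta):
    """Stand-in for high-frequency noise: 12 bumps around the ring."""
    return 0.5 + 0.5 * math.sin(12 * theta)

def max_interpolation_error(vertex_count, samples_per_edge=50):
    """Worst gap between the true bumps and straight edges between displaced vertices."""
    worst = 0.0
    step = 2 * math.pi / vertex_count
    for i in range(vertex_count):
        a, b = i * step, (i + 1) * step
        da, db = detail(a), detail(b)   # displacement only exists at vertices
        for k in range(samples_per_edge + 1):
            t = k / samples_per_edge
            true_value = detail(a + t * (b - a))
            flat_value = da + t * (db - da)  # the edge is flat between vertices
            worst = max(worst, abs(true_value - flat_value))
    return worst

for count in (12, 24, 96):
    print(count, max_interpolation_error(count))
```

With 12 vertices, every vertex happens to land where the bumps cross 0.5, so the ring comes out perfectly flat and all the detail vanishes. With 96 vertices, the edges follow the bumps closely.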

The default Unity sphere is a pretty simple mesh without many vertices to work with. To get a less jagged displacement, we can let the graphics card generate more vertices for us, placed in between the existing ones, in a process called tessellation. Here is what the wireframe of our properly displaced and tessellated sphere looks like compared to the starting model:
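The GPU does tessellation in dedicated pipeline stages, but the effect on vertex count is easy to see with a CPU-side stand-in. The sketch below repeatedly splits every triangle into four at its edge midpoints, starting from an octahedron, with shared midpoints cached so neighbors reuse them. Several choices here are assumptions for illustration only and are not how the shader does it: the octahedron start, the midpoint split, and snapping new points onto the unit sphere.

```python
import math

def normalize(v):
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)

def octahedron():
    """A tiny starting mesh: 6 vertices, 8 triangles."""
    verts = [(1.0, 0.0, 0.0), (-1.0, 0.0, 0.0), (0.0, 1.0, 0.0),
             (0.0, -1.0, 0.0), (0.0, 0.0, 1.0), (0.0, 0.0, -1.0)]
    faces = [(0, 2, 4), (2, 1, 4), (1, 3, 4), (3, 0, 4),
             (2, 0, 5), (1, 2, 5), (3, 1, 5), (0, 3, 5)]
    return verts, faces

def subdivide(verts, faces):
    """Split each triangle into 4, adding one new vertex per edge."""
    verts = list(verts)
    cache = {}

    def midpoint(i, j):
        key = (min(i, j), max(i, j))   # shared edges reuse the same new vertex
        if key not in cache:
            a, b = verts[i], verts[j]
            verts.append(normalize(tuple((x + y) / 2 for x, y in zip(a, b))))
            cache[key] = len(verts) - 1
        return cache[key]

    new_faces = []
    for a, b, c in faces:
        ab, bc, ca = midpoint(a, b), midpoint(b, c), midpoint(c, a)
        new_faces += [(a, ab, ca), (b, bc, ab), (c, ca, bc), (ab, bc, ca)]
    return verts, new_faces

verts, faces = octahedron()
for level in range(1, 4):
    verts, faces = subdivide(verts, faces)
    print(level, len(verts), "vertices,", len(faces), "triangles")
```

Each level roughly quadruples the vertex count, so every round gives the noise displacement about four times as many points to push around.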

And with that, I think it is finally time to show you what this thing actually looks like in action. Unfortunately (but actually, fortunately?) nobody behaved badly enough to warrant a ban during my first weekend streaming the Blackout beta, so I had to do some symbolic launches of people who were being jerks within the game itself (people telling others to kill themselves, shouting racist/homophobic garbage, etc.). My process was set up to pull in the name of the person being banned, but it defaults to "Unknown" if that ban queue is empty. I already have improvements planned to let me add these names manually for such occasions, but I was too excited to talk about this to wait and get more video, haha.
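The name handling boils down to a queue with a fallback. Here's a tiny sketch. Both function names are hypothetical, and `add_manual_name` just illustrates the kind of manual-entry hook mentioned above; it isn't something that exists yet.

```python
from collections import deque

def next_launch_name(ban_queue):
    """Pop the oldest pending ban, or fall back to "Unknown" when none is queued."""
    return ban_queue.popleft() if ban_queue else "Unknown"

def add_manual_name(ban_queue, name):
    """Hypothetical hook for queuing a name by hand (e.g. an in-game jerk)."""
    ban_queue.append(name)

queue = deque()
add_manual_name(queue, "jerk123")
print(next_launch_name(queue))  # the queued name
print(next_launch_name(queue))  # queue is empty again, so "Unknown"
```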

The point where you stop the power meter, as if this shit were Mario Golf, determines the force of the spring that pulls the launch chassis. I made sure to tune it so that low-power shots were still pretty fun, lol. Given that I showed how the slingshot was implemented, you probably already guessed, but the slingshot wires and the posts they're connected to are all purely cosmetic.
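One simple way to build a meter like that is with a triangle wave that sweeps from 0 up to 1 and back. Whatever value it shows when it's stopped picks a spring strength between a minimum and a maximum. Keeping the minimum well above zero is one way to keep weak shots fun. This is a sketch, not my actual implementation: the period, the strength range, and both function names are hypothetical.

```python
def meter_value(elapsed, period=1.5):
    """Triangle wave: rises 0 -> 1 over half a period, then falls back to 0."""
    phase = (elapsed % period) / period
    return 2 * phase if phase < 0.5 else 2 * (1 - phase)

def spring_strength(meter, min_strength=800.0, max_strength=5000.0):
    """Map a meter reading in [0, 1] to a spring strength; the floor keeps weak shots lively."""
    meter = min(1.0, max(0.0, meter))
    return min_strength + meter * (max_strength - min_strength)

for stopped_at in (0.0, 0.375, 0.75, 1.2):
    m = meter_value(stopped_at)
    print(stopped_at, m, spring_strength(m))
```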

Political opponents beware, the Gerstmann 2020 platform is ready for action.
