I'm happy with it. I'm on a 780, and at mostly high settings with FXAA, even with the hair setting on, I get a steady 60fps. 1080p, of course. It does drop in cutscenes, but that seems to be common for most people. I'm sure it could look much better, but damn, it's still a gorgeous-looking game.

Rise of the Tomb Raider

Game » consists of 7 releases. Released Nov 10, 2015

A follow-up to 2013's Tomb Raider reboot. After the events of the previous game, Lara spends a year searching for an explanation of what she saw. Her quest to explain immortality leads her to Siberia, home of the mythical city of Kitezh.

How is the performance of Rise of the Tomb Raider (PC)?

@shivoa: That's a really interesting idea. The only way to really test it is if someone cracks Denuvo on one of them and runs the game with a day one patch though.

Played about an hour now so have a clearer idea of performance - Still excellent.

Man, this is a good looking game. First time I've seen all my GPU's 6GB VRAM in constant use too.

Does anyone know how Tomb Raider handles SLI? I have two 980s, I'm sure I will be fine with a single card but hey...you know.

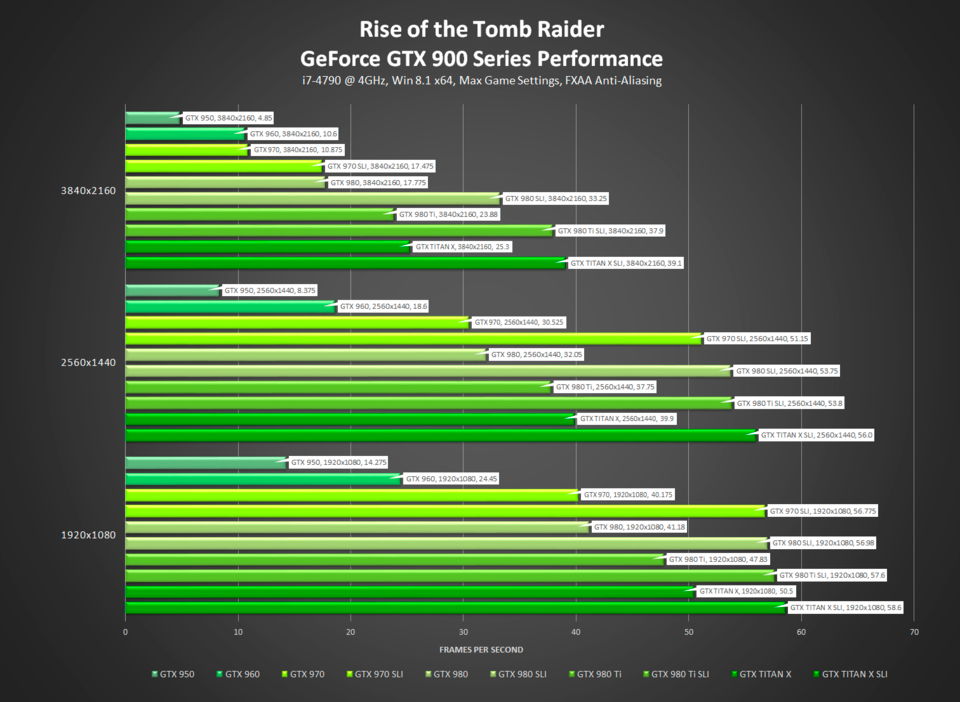

Well, according to Nvidia, SLI performs 50% better than a single card in Tomb Raider. The 980, 980 Ti and Titan X all max out at about the same level in SLI as well, which to me suggests the CPU or something other than the video card is the bottleneck.

edit: The actual performance figures don't look right, though, as they don't even hit 60 FPS at 1080p with dual Titan X ;) Maybe I'm reading it wrong.

@rethla: The interesting part of that guide is that outside of AA (which is to be expected) there doesn't really appear to be any major performance hogs, all of the gains/losses are very incremental. To see any significant changes in frame rate you'd need to start knocking down every thing across the board.

The perf is kind of all over the place. I'm playing it on four Titan Xs. Sometimes it's well over 100fps and sometimes it's crap. It isn't because the game is demanding (it isn't): even when it's running super fast, GPU usage isn't maxed out, and when the perf is bad, GPU usage is VERY low. My gut tells me some effect is breaking something.

FPS changes radically with a turn of the camera; it's very strange. From like 110 to 50.

Also, this is the only game I've played (where the issue wasn't a memory leak) that right away used over 7 gigs of VRAM (and at some points went up to over 9 gigs).

There is a very small FPS counter in the bottom right. Whatever the snow effects are, they don't use the GPU (low GPU usage and no great fps).

@zurv: You must be severely CPU-limited with your 4x Titan X cards. I tried that sequence with SSAA enabled, which is effectively rendering it at 4K resolution, and I'm not getting the same choppiness, plus I'm getting on average 10 more FPS than you.

If by "snow effects" you mean the avalanche, it's just a pre-rendered movie they play in the background, and it looks really low-res at resolutions above the intended 1080p, just like the cutscenes. It's a strange bug how it jitters around like that, however.

4-way SLI is notoriously bad at scaling and providing consistent experiences; you'd probably get a more or less identical experience with 3-way and possibly a more consistent experience with 2-way, albeit with a lower peak framerate.

Case in point here is a scaling test PC Perspective did around the 980 launch - http://www.pcper.com/reviews/Graphics-Cards/NVIDIA-GTX-980-3-Way-and-4-Way-SLI-Performance

There might be room for this to improve with DX12 and Vulkan, as GPUs at that point effectively become independently addressable processors, but the proof will be in the pudding.

Does it look much better than the 2013 Tomb Raider? That was a great looking game. This is a lot more demanding so it better look much better.

I upgraded to a 270X for TR 2013 and a 280X for Dragon Age Inquisition. I am not upgrading again anytime soon. Got a 3570K at 4100MHz and 16GB RAM. I easily play TR2013 on ultra, but it seems you need a 970 or 390X at the very least to play the new game on ultra.

Seems badly optimized to me, since a 280X has nearly twice the shaders of the PS4 GPU and 2.6 times the shaders of the XONE GPU. Also, my CPU is much more powerful than the laptop-grade CPUs in the consoles, which run at just 1.6 to 1.75GHz on PS4 and XONE.

When you get the game, can anyone tell me which settings in the new game are equivalent to ultra settings in TR2013 in terms of how it looks?
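As a rough check on the shader comparison, here is a minimal Python sketch. The shader counts (2048 for the R9 280X, 1152 for the PS4 GPU, 768 for the Xbox One GPU) are commonly cited specs added here for illustration, not numbers from this thread. Raw shader count also ignores clock speed, memory bandwidth and console-specific optimization, so treat the result as a ballpark only.

```python
# Commonly cited shader (stream processor) counts; added for illustration,
# not taken from the thread.
SHADERS = {
    "R9 280X": 2048,
    "PS4 GPU": 1152,       # 18 compute units x 64
    "Xbox One GPU": 768,   # 12 compute units x 64
}

def shader_ratio(pc: str, console: str) -> float:
    """How many times more shaders the PC card has than the other GPU."""
    return SHADERS[pc] / SHADERS[console]

ps4_ratio = shader_ratio("R9 280X", "PS4 GPU")
xbox_ratio = shader_ratio("R9 280X", "Xbox One GPU")
print(f"280X vs PS4: {ps4_ratio:.2f}x")        # 280X vs PS4: 1.78x
print(f"280X vs Xbox One: {xbox_ratio:.2f}x")  # 280X vs Xbox One: 2.67x
```

By this count the 280X has roughly 1.8x the PS4's shaders and about 2.7x the Xbox One's.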

I'm playing on high settings and it looks great. One of the nicest looking games I've seen to date. They did a great job with the shadows and lighting. Much better than TR2013.

I'm running a stock 2500K Sandy Bridge, 8GB of RAM and a GTX 970. So far I'm getting a pretty solid 60fps, dipping slightly into the high 50s, at 1080p with everything set to high, motion blur off, and the hair setting "On". It seems like the hair is what really kills frame rates, along with some of the ultra-high effects. This game is also surprisingly CPU-intensive, which can account for some minor issues. But if it's anything like the last game, most of those issues will be solved after a few patches and driver updates. I remember TR2013 getting about a 30% performance boost from patches and drivers alone last time, so I have no doubt this game will run fine once they work out a few kinks.

I've got a GTX760* and Rise (once you get into the first really big area) is really pushing the limit of where I'm happy with settings vs performance. I want those best-quality shadows, considering they're only a small perf loss. I'd really like something better than FXAA, as the aliasing on the trees etc. is terrible at 1080p (I played TR2013 on the same hardware at 4K, and that really made for a clean presentation), but there's no chance of me enabling SSAA or even mild DSR above 1080p and still holding 30fps. Obviously (as nVidia's figure above of 24fps for the GTX960 at 1080p on max settings makes clear) I have to scale some settings back a bit, but (as noted) a lot of them really don't make a major difference to perf, so scaling them back means giving up visuals for a very modest increase in framerate.

Medium vs high textures (high seems to be noted as being for 3GB of VRAM) doesn't actually give me the perf loss I'd expect if it were thrashing the texture cache. But perf does seem to be rather unstable in general (it's also unstable with medium textures), which is a pain, as I'd be happy with 1080p30 if it were 100% stable. It seems like I can play the game, and if it really chugs, going into the menus to let it finish whatever it's doing (moving textures around? getting bogged down somewhere else?) and then unpausing gets me back to a solid 30fps. Which is really weird behaviour.

I'm OK with 1080p30 and good-looking; it's just a bit of a shame that I was literally running this engine (a previous version) with the 2013 assets on the same PC, rendering four times as many pixels with fewer performance concerns. This looks better; I'm just not sure it looks "literally less than a quarter of the performance" better. But I was already eyeing up new GPUs, so I guess this is just another sign that it's getting to that time when I either look at the upgrade budget or have to deal with cranking back settings or even lowering the res.

* Gah, I just have to wait for the next gen of GPUs to land. At this point I'd be crazy not to get a FinFET part, hopefully with HBM2 or just mountains of GDDR5 so I never have to worry about which texture size to choose. Although at this point textures really are diminishing returns if the engine has a decent texture streaming process that only loads the mipmaps it needs, even with the albedo+roughness+reflective+normal+[insert some attribute here to help with something like subsurface scattering] texture loads of PBR. You only need massive textures for stuff the camera is really close to; games like Mordor and Tomb Raider both show very small gains when going to the highest res, outside of where low-res normals mess with ambient occlusion and similar effects.
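The mip-streaming argument can be illustrated with a generic sketch: pick the coarsest mip level that still covers a texture's on-screen footprint, and only keep that level and the smaller ones resident. This is a textbook approximation, not Rise of the Tomb Raider's actual streaming code. It assumes square power-of-two textures and 4 bytes per texel (real games mostly use block-compressed formats, which shrink the numbers but not the ratios), and the function names are hypothetical.

```python
import math

def mip_count(size: int) -> int:
    """Levels in a full mip chain for a square power-of-two texture (size x size down to 1x1)."""
    return int(math.log2(size)) + 1

def needed_mip(texture_size: int, on_screen_pixels: float) -> int:
    """Coarsest mip level that still has at least ~1 texel per screen pixel.

    Level 0 is full resolution; each level halves width and height.
    """
    if on_screen_pixels >= texture_size:
        return 0
    level = math.floor(math.log2(texture_size / max(on_screen_pixels, 1.0)))
    return min(level, mip_count(texture_size) - 1)

def resident_bytes(texture_size: int, first_level: int, bytes_per_texel: int = 4) -> int:
    """Memory for mip levels first_level..last, assuming uncompressed texels."""
    total = 0
    for level in range(first_level, mip_count(texture_size)):
        side = texture_size >> level
        total += side * side * bytes_per_texel
    return total

# A hypothetical 4096x4096 texture covering only ~256 pixels on screen:
level = needed_mip(4096, 256)
print(level)                        # 4 (the 256x256 level)
print(resident_bytes(4096, 0))      # 89478484 bytes for the full chain
print(resident_bytes(4096, level))  # 349524 bytes from level 4 down
```

In this example, streaming only from level 4 down keeps about 0.4% of the full chain's memory resident, which is why the biggest textures mostly pay off on surfaces close to the camera.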

So I actually saw something new just now. The game had got itself so twisted not even exiting to the main menu fixed it.

I assume we all set our game up so the main menu screen is fps-capped. The studio apartment isn't a hard scene to render, so your game should be hitting the fps cap there, and for me that's 30 (I'm on an old mid-range GPU, and I prefer a consistent 30Hz over a potentially micro-stuttering 60Hz when I know the settings I'm picking will push the game way beyond a consistent 60fps anywhere). Only I was in that first main area again, doing some exploration by the mine, and the fps got bad. Really inconsistent. I tried idling in the menu, cycling textures down and back up to get them to reload only the stuff it needed, and even lowering the res. Nothing fixed it, but then this is a game where I've noticed 720p is not a lot faster than 1080p on my machine. That's weird, but it's the same weird that nVidia's settings guide shows: changing settings really doesn't change the fps as much as you'd expect. Really sharp shadows are not that much slower than low-res blurry ones, and turning shadows off entirely is about the only setting change that radically changes your fps. Except maybe the hair, and even then it's not like the last game, where TressFX basically broke on any nVidia rig and halved the fps on every machine.

Anyway, 30fps went down to 8 for no good reason. I went into the menu and it didn't jump back to 30 (normally the map menu is fps-capped, since the game world isn't rendered); it was running at 11fps. Weird. I tried poking settings and, yep, no matter how I set the game, 8fps in-game. Exited out to the main menu: 11fps. The game just gave up. No matter how little work was required of it, 11fps or worse. No idea. Quitting to desktop and restarting fixed everything.
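The preference for a locked 30Hz over an unsteady 60Hz comes down to frame pacing. Here is a minimal, idealized Python sketch of it, assuming a 60Hz display with vsync and perfectly regular frame delivery (real games are far messier, and the function names are hypothetical). At 30fps every frame stays on screen for exactly two refreshes, while at 45fps frames alternate between two refreshes and one, which reads as judder.

```python
from collections import Counter

def frame_time_ms(fps: float) -> float:
    """Time budget per frame in milliseconds (e.g. 30fps -> ~33.3ms)."""
    return 1000.0 / fps

def refreshes_per_frame(fps: int, refresh_hz: int = 60, refreshes: int = 60) -> list[int]:
    """How many display refreshes each rendered frame stays on screen.

    Idealized model: frame k finishes at exactly k / fps seconds, and each
    refresh j (at j / refresh_hz seconds) shows the newest finished frame.
    Integer arithmetic avoids floating-point rounding at the boundaries.
    """
    shown = [(j * fps) // refresh_hz for j in range(refreshes)]
    counts = Counter(shown)
    return [counts[k] for k in sorted(counts)]

print(refreshes_per_frame(30)[:6])  # [2, 2, 2, 2, 2, 2]: even pacing
print(refreshes_per_frame(45)[:6])  # [2, 1, 1, 2, 1, 1]: uneven pacing
print(frame_time_ms(8))             # 125.0 (ms per frame at 8fps)
```

Under this model a locked 30fps never varies frame to frame, while anything between 30 and 60 mixes 16.7ms and 33.3ms display times.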

So that's weird. GPU logs reported it was not stalling out the way Just Cause 3 had (Rise is heavy load in the game, low load in the map menu so the GPU definitely felt very little demand when in that 11fps map menu; clear as day in the graph of GPU load but JC3 showed the VRAM dropping to 0% and the GPU stalling while Rise showed none of that) but the end result was a game that runs well enough and then seems to get "infected" with a massive performance loss that doesn't care if the game is doing complex stuff or not. Almost a perfect description of the issue I had with JC3 (and lots reported on) and also what Destructoid reported on as one of their issues with a GPU that really should show no issues rendering this game at 60fps.

Starting to wonder if this is Squidix central tech going wrong; a driver bug (and yet, JC3 was more affected on AMD and having a single bug that breaks both some nVidia and AMD systems would be real weird); or maybe something in the DRM/Denuvo (which I suppose I'm also kinda writing down as "shared Squidix code").

Games crashing or even softlocking I'm no stranger to, but this recent spate of games that degrade in performance while technically still running (the last two AAA games I've bought at launch on PC: JC3 and Rise) is really weird. I guess you could put Batman in that bucket too, as that was also like this.

*Googles DRM status of Arkham Knight*

OK, I don't want to sound like a conspiracy nut, guys, but that's another Denuvo-protected game. And it appears that Lords of the Fallen used it and had a dev talk (now gone due to Twitch's archiving policy) about a small perf cost at run-time (as in, this isn't something that runs and then stops when auth is complete, which is what traditional DRM does, but rather is always running, and so is able to go wrong and crater performance if it has a bug in it).

Has a new generation of DRM-like anti-piracy measures arrived that's basically ruining PC ports (at least for the percentage of users who experience this bug as massive drops in performance unconnected to the rendering load in the game) for people who actually pay for their games? This is really not good. Not good at all. Rise running at 1080p30 is fine (it's a nice-looking game). It going wrong and running at 8fps (in a scene that normally runs fine) is not, especially if it's the anti-piracy stuff that's broken and causing the issue.
