Video Games vs. The Movies

By CairnsyTheBeard

Certain elements of some (mainly AAA) games, such as Battlefield 4, Call of Duty: Advanced Warfare and The Order: 1886, make me believe that they are trying to ape cinema in terms of presentation and execution. Some of the typically film-y things they get up to include lens flares, motion blur and "immersive" anamorphic aspect ratios. Some of these ideas make sense but others don't. For example, I can understand why Resident Evil used camera angles as a way of constructing tension and getting around the technical limitations of the console at the time... but not why I can become blinded by a lens flare in Battlefield 4, a first-person human simulator.

What is the motivation for adding the needless and negative aspects of typically cinema-centric techniques to a perfectly fine formula? Is it for sheer presentation? Do devs or publishers think their game would advertise and sell better if they marketed it to look aesthetically as close as possible to cinema to appeal to a wider audience? Would Aunt Maud notice the lens flare in an advertisement for the next Battlefield and be enticed to purchase the game? What about the players? Do we like a game more if lens flares pop up? Or if we look down our weapon's sights and the foreground blurs? Or if we see an enemy move fast and appear super blurred?

Some aspects of what games have taken from cinema can be positive if used correctly and sparingly in the right places, contexts and genres, but their inclusion can seem odd sometimes.

Lens Flares

Lens flares only occur within the lens of a camera, not within the human eye. This makes their addition questionable (if admittedly pedantic to criticize) within "immersive" first-person games like Battlefield. It's arguable that a certain aesthetic is being aimed for in games like Halo, a sci-fi where lens flares evoke footage of stars, space, the Star Trek reboot and shiny future stuff (and I guess the protagonist is wearing a helmet). However, I can't help but feel it's all in excess, especially when it's a damn third-person game like Arkham Origins! Was I a camera all along? It can add up to feel cheesy and fake, not to mention that at times it even affects gameplay when you need to bloody see. So use lens flares where they make literal or artistic sense but not where they don't, and always sparingly!

Depth of Field

This is a personal gripe of mine in lots of games, not only from a pedantic immersion and realism point of view but from a gameplay perspective too. See, the thing is, I create my own depth of field whenever I look at something, whether it be in the foreground or the distance. When I'm watching a movie, looking through the director's eye, the film is telling me where to look by using techniques like camera angles, cuts, and depth of field. I'm supposed to be looking at either the foreground or the background, or, if there is no depth of field, I'm being directed to look at everything because it all has significance.

In games a varying degree of control is given to the player, often including different planes of vision (foreground and background) and camera control. I like depth of field when it's used well, like in Limbo. It really adds to the atmosphere and isolating feeling of the game and, crucially, the player does not control the plane of vision. In the case of Limbo the developers are like the director: you're supposed to only look at the foreground where the boy is.

Where I object to DoF is in games like Call of Duty, where my gun and nearby objects become blurred when I use my sights. Now I get it, the devs are going for a (ugh...) cinematic look, and it's realistic in the sense that you would not be able to focus on your gun when it's held near your face. It may even affect gameplay balance, although they don't mind it being disabled on PC. However, it IS distracting, because I'm creating my own depth of field by choosing to look at different areas of the screen. In a film I'm not meant to be looking bottom left when the focus is top right, but in a game I may need to look anywhere for a potential threat, so I have to align my character's crosshair with where my eyes are looking to create the correct depth of field. That is not only immersion-breaking but can affect gameplay when every millisecond is important. An interesting exception would be a game like Alien: Isolation, where no extreme accuracy is needed and the blurring of the background when using the motion tracker creates genuine tension and acts as a positive gameplay trade-off!

Motion Blur

In some games motion blur is used as a cheap filler to paper over the cracks of a less than optimal frame rate, for example in a lot of console games like Halo: Reach. Other games use it for effect, to make the player feel like they are moving fast in a racing game or when a cyborg ninja displays his acrobatics. It's often implemented in different fashions too, depending on the engine and desired effect. Examples of needless or poor motion blur include Mass Effect, Okami and Halo: Reach. Halo's was bad because it couldn't be disabled. In a fast-paced, precision-based action game, you want no visual distraction, so it's kind of bad when you turn your view and the ENTIRE world blurs until you stop. It gives the illusion of a smoother-running game in games that can't achieve that, but on the whole I'd appreciate a worse-looking game that runs well without motion blur more than one that looks stellar but runs badly and is covered up by blur.

Additionally, there is no argument to be made that a game with camera-movement-based motion blur looks more "cinematic", because the way FILM motion blur works is vastly different to how games use it. A film camera typically shoots at 24 frames per second. Each frame captures light over a short exposure, so anything that moves during that exposure is smeared, and running the frames together creates motion. Not only is the blur a real byproduct of the process, but the movie-watching experience is non-interactive, meaning it's perfectly comfortable to watch a film with blur at 24 FPS while Okami gives me a headache.
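To give a rough sense of scale for that byproduct, here is a minimal sketch of how long a film frame is exposed and how far an object smears in that time. It assumes the common 180-degree shutter convention; the function names and the object speed are hypothetical, picked purely for illustration.

```python
def exposure_time(fps: float, shutter_angle_deg: float = 180.0) -> float:
    """Seconds of light captured per frame.

    Assumes a rotary-shutter model: the shutter is open for
    shutter_angle_deg / 360 of each frame interval.
    """
    return (shutter_angle_deg / 360.0) / fps


def blur_length_px(speed_px_per_s: float, fps: float,
                   shutter_angle_deg: float = 180.0) -> float:
    """Approximate smear, in pixels, of an object moving at constant speed."""
    return speed_px_per_s * exposure_time(fps, shutter_angle_deg)


# Assumed example: 24 FPS film, 180-degree shutter,
# object crossing the frame at a made-up 960 pixels per second.
film_exposure = exposure_time(24)
film_blur = blur_length_px(960, 24)
print(f"exposure: 1/{1 / film_exposure:.0f} s, blur: {film_blur:.0f} px")
```

The blur here falls out of the capture itself, whereas a game has to compute something similar as a post-process on frames that were rendered perfectly sharp.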

Aspect Ratio

Films have used many different aspect ratios, some very wide, some very square, often chosen not only due to technical limitations but also for artistic reasons. Spielberg shot "Jurassic Park" in 1.85:1, which was relatively tall, to accommodate and emphasize the size of the dinosaurs. Similarly, "The Grand Budapest Hotel" used a variety of filmmaking techniques, including changing aspect ratio, to complement its wacky postmodern storytelling style. Modern TVs are 16:9, which fits most widescreen films well, albeit often with some black bars. Games have always adopted the display output of the time, which makes sense, but few games have really experimented with what can be achieved by changing the proportions of the screen. An old horror movie like Night of the Living Dead is given a claustrophobic atmosphere by its boxy 1.37:1. There's a problem when gameplay meets presentation, though, as games have to have room for a UI and use space efficiently so the player feels in control. A shoot 'em up would be better suited to a tall ratio, but an FPS's wide field of vision would better suit widescreen.

There have been debates for years about changing the original aspect ratio of films and TV shows, and I vehemently agree that they shouldn't be altered. That goes especially for cases where the image is cropped to exclude part of the frame or stretched to fill the screen, but also for material that was shot in widescreen but never intended to be seen that way and was later released in widescreen (e.g. The Shield, The Wire HD remaster).

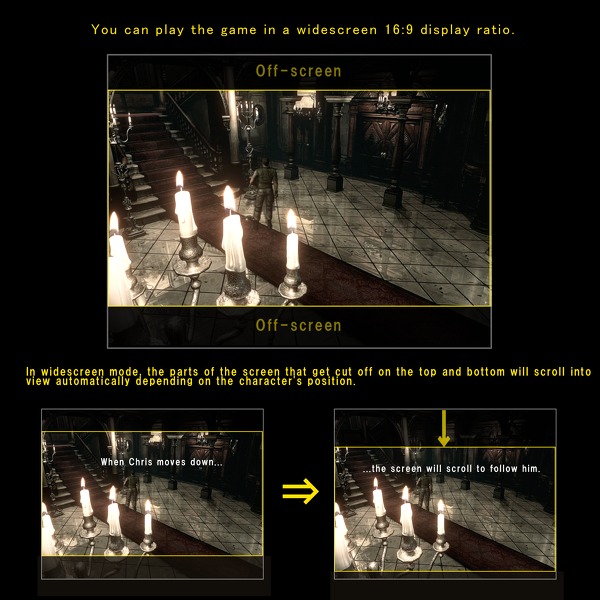

I think games, as an interactive medium, should be changed to suit the player. The recent remaster of the Resident Evil remake demonstrates this well. There is an option for the original 4:3 ratio, which I stick to not only because it looks better, since the fixed camera angles were framed for that aspect ratio, but also because of the gameplay advantage of having more screen space and seeing zombies creep up on me. It is disappointing when developers use the excuse that they are going for a "cinematic feel" with widescreen when all they are really doing is trying to cut down on pixels to push the detail levels and maintain a stable frame rate. Even if they were going for a deliberate widescreen ratio, that doesn't make much sense because, unlike cinema screens, all TVs today are 16:9 or 16:10, so they would be deliberately making a product that doesn't fit modern displays!
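To put a rough number on that pixel saving, here is a minimal sketch that fits an image of a given aspect ratio inside a display and works out what fraction of the screen is left as black bars. The 1920x1080 display and the 2.40:1 and 4:3 ratios are assumptions chosen for illustration, not figures taken from any particular game's spec, and the function names are hypothetical.

```python
def fitted_size(display_w: int, display_h: int, aspect_ratio: float) -> tuple[int, int]:
    """Largest image of the given aspect ratio that fits inside the display."""
    if aspect_ratio >= display_w / display_h:
        # Wider than the display: full width, bars top and bottom (letterbox).
        return display_w, round(display_w / aspect_ratio)
    # Narrower than the display: full height, bars left and right (pillarbox).
    return round(display_h * aspect_ratio), display_h


def unrendered_fraction(display_w: int, display_h: int, aspect_ratio: float) -> float:
    """Fraction of the display's pixels that end up as black bars."""
    image_w, image_h = fitted_size(display_w, display_h, aspect_ratio)
    return 1 - (image_w * image_h) / (display_w * display_h)


# Assumed example: a 16:9 1080p TV showing a 2.40:1 image and a 4:3 image.
for label, ratio in [("2.40:1", 2.40), ("4:3", 4 / 3)]:
    width, height = fitted_size(1920, 1080, ratio)
    bars = unrendered_fraction(1920, 1080, ratio)
    print(f"{label}: {width}x{height}, {bars:.1%} of the screen is black bars")
```

Under these assumptions, either ratio leaves roughly a quarter of the panel dark, which is rendering budget that can be spent on detail or frame rate instead.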

That's not to say I'm against games using different ratios, but in the case of games like The Order, it's the deceitfulness combined with the lack of player choice and the wrong context for the chosen ratio (a third-person action shooter that already has a restricted view and would benefit from more space). Widescreen ratios are put to good use in cut-scenes, where they tell the player that a sequence is non-interactive, and this works quite well. Just as it is the player's choice to fill the screen of their TV, it should also be their choice which buttons to assign to the controls, what FOV to use and how to set the sound levels, all things that in movies are best left to the director and editors.

Cut-Scenes

Cut-scenes were a natural evolution of storytelling in games, as graphical restrictions limited the ability to express emotion in characters or stage elaborate scenes that no engine could render. It was also natural, after more than a century of cinema, to just slot in a scene and have players understand what's going on. These days cut-scenes can feel like a bit of a cop-out to me. Not always: I enjoy the cut-scenes in Halo and the cinematics in Blizzard's games. But since games like Half-Life mastered the art of telling a story without changing the perspective or taking you out of the character or gameplay, keeping you immersed instead, I've become less fond of them for certain genres. CGI cinematics are the worst because they don't represent the game at all, which is problematic in advertising. My friends might see an amazing CGI trailer for the new Assassin's Creed and think "wow", but I see past that at this point and think there will be lots of towers, climbing and tailing, and less dubstep, martial arts and striking camera angles.

I am a fan of in-engine cut-scenes if the game must have cut-scenes, but again, if there is a better way to tell the story in the game, then a cut-scene may be no more than a nice addition, or even a distraction. Uncharted and Gears of War have good in-engine cut-scenes that don't take you out of the experience, as the games are third-person and their tone or vibe fits the scenes well. But for more seriously story-centric experiences, what games like Shadow of the Colossus, Journey and Half-Life have shown me is that there is so much potential for storytelling within the game world using the tools the player is given. Heck, even the most linear storytelling segment (the bridge collapse from the beginning of Half-Life 2: Episode 2 springs to mind) is made more exciting when the player is simply allowed to continue to play within the world. Anything could still happen, whereas in cut-scenes you know you are safe. It would be nice if developers could give players more freedom to experience the story the way they want, because you're not going to convince someone who is only there to shoot guys in Uncharted to sit through cut-scenes, but that same person might pay attention if control of the game wasn't taken away from them and they were given the responsibility to pay attention or ignore it as they choose.

Fin

The influence of cinema in games is undeniable and not always positive, ranging from irritating quirks to things that make perfect sense and enhance the artistic vision of the developer. I just wish a little more care would be put into the decisions behind some features, and that players would be offered more overall control over said features. Being able to turn off motion blur and depth of field would let people experience the interactive game the way they want (something important to me as a primarily PC player). This is more of a big deal because these things affect gameplay and are not simply an artistic addition, so the argument of "oh, it's the way the developer wanted you to experience it" doesn't hold. If more games integrated the best features of cinema that make sense to implement into the gameplay itself, then there would be more variety in the mainstream AAA gaming world.